POLS 1140

What is public opinion?

Updated Mar 9, 2025

Friday

Plan for Today

Definitions of Public Opinion (Last Friday)

A brief history of public opinion (Last Friday)

Finish Public opinion and democratic theory (Today)

Measuring public opinion through surveys (Today)

Statistics and POLS 1140 Part III (Today)

Using polls to forecast elections (Today)

Review

On Wednesday

Reviewed some empirical challenges to the Folk Theory of Democracy – do citizens hold consistent, coherent preferences that inform politics?

We introduced the concept of sampling theory, and talked about how to quantify uncertainty via confidence intervals and hypothesis tests.

We discussed some (overly) simple heuristics for interpreting results:

- Does the confidence interval contain 0?

- Is the p-value less than some threshold (e.g. p < 0.05)?

For Next Week

Reflection Papers

For an 85, take a paper on the syllabus and summarize the following:

- What’s the research question?

- What’s the theoretical framework?

- Describe the data and methods

- What are the results?

- What are the broader contributions?

For a 100, do the same for a related paper not on the syllabus.

Tip

Typically, I’ll give you a list of related articles in the slides. You might also plug the paper from the syllabus into Google Scholar and search its citing and related articles.

Plan for Today

Definitions of Public Opinion (Last Friday)

A brief history of public opinion (Last Friday)

Finish Public opinion and democratic theory (Today)

Statistics and POLS 1140 Part II (Today)

Measuring public opinion through surveys (Start Today)

Using polls to forecast elections (Friday)

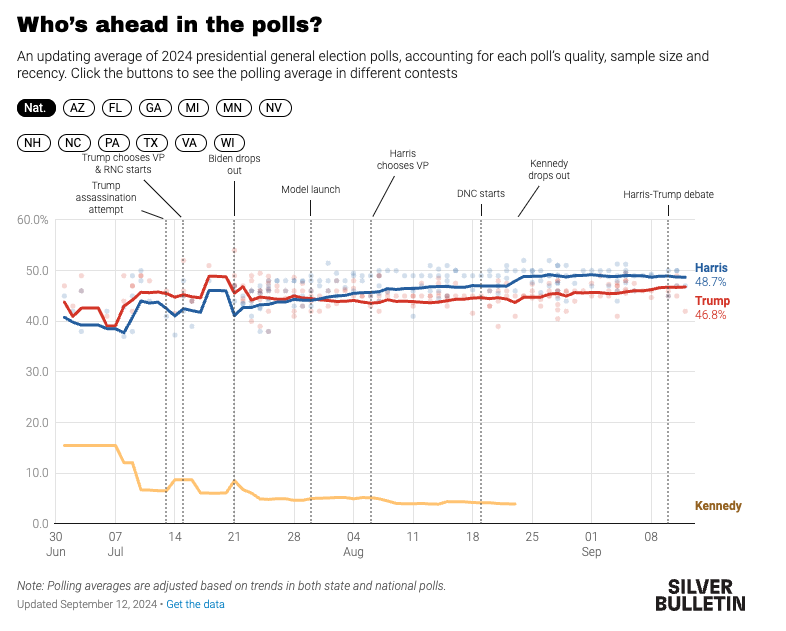

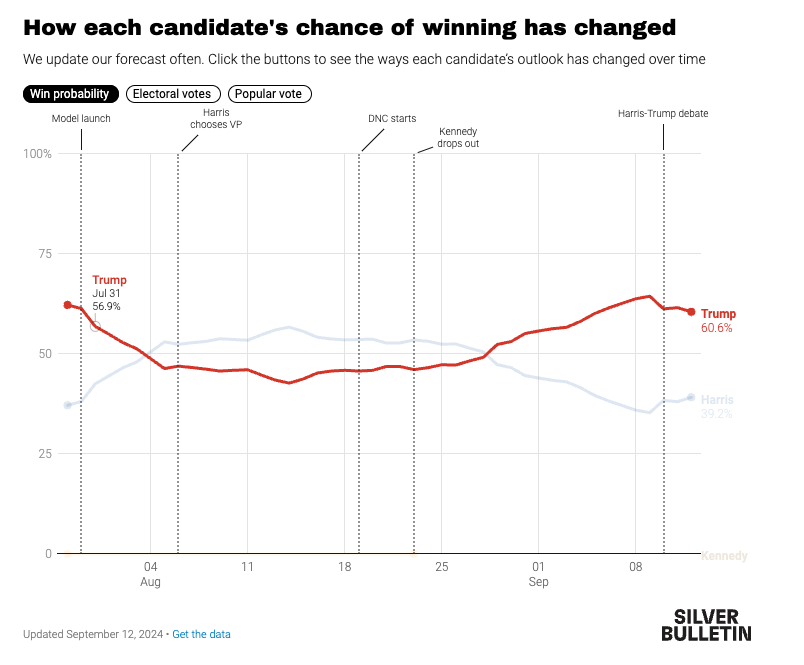

Harris-Trump Debate

Post-debate consensus is that Harris won the debate

Instant poll of debate watchers, from CNN-SSRS poll

— Manu Raju (@mkraju) September 11, 2024

Who won the debate?

Harris: 63

Trump: 37

Same group on who they expected to win the debate

Harris: 50

Trump: 50

Will it matter?

Conventional wisdom suggests debates have minimal effects. Why?

Who’s watching

- Politically interested folks who’ve probably already made up their mind

What happens after?

- Competitive information environment

- News cycles in a dynamic campaign

Evidence that debates can matter:

- Nixon-Kennedy

- Trump-Biden

More on this on Friday

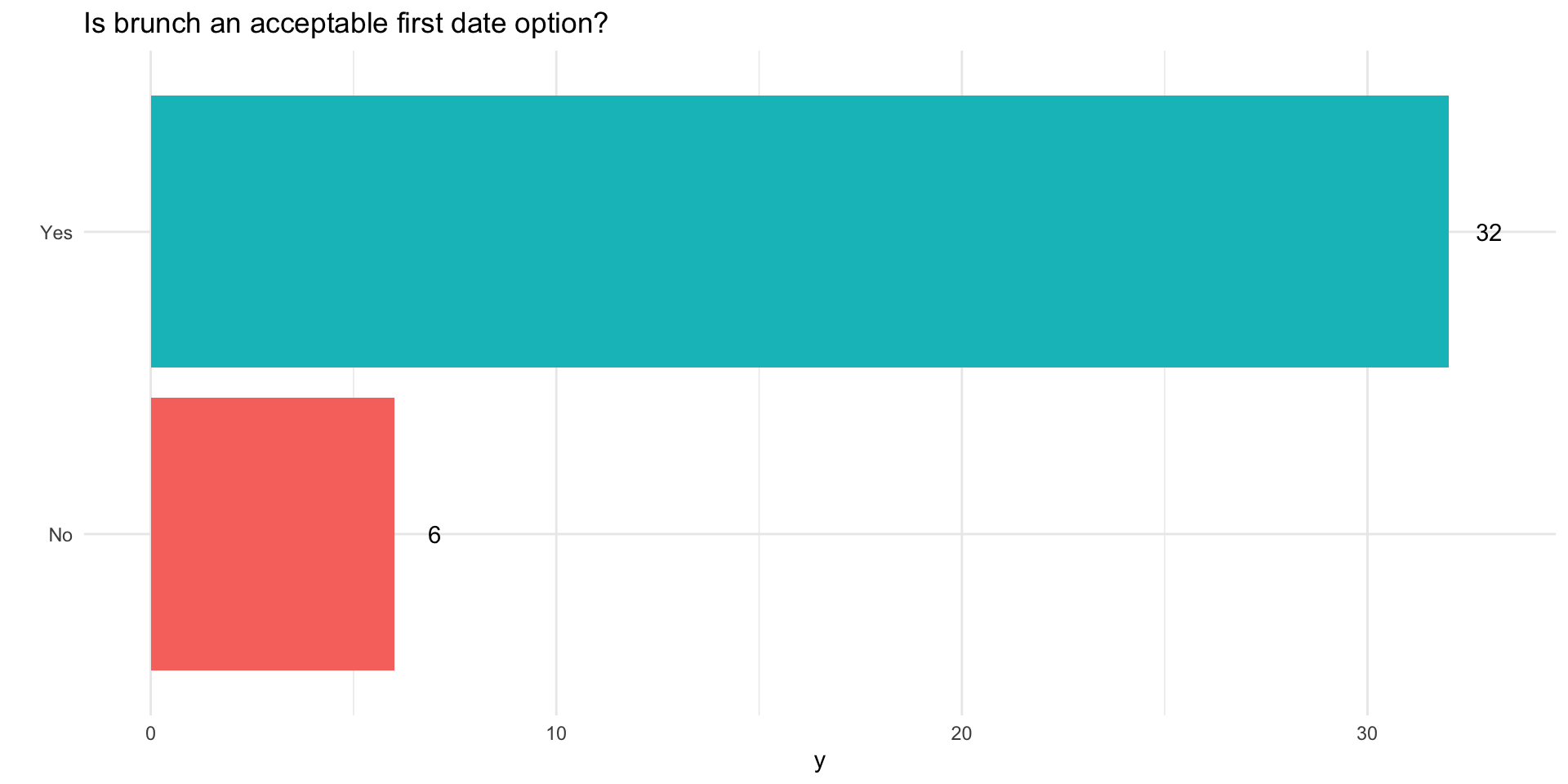

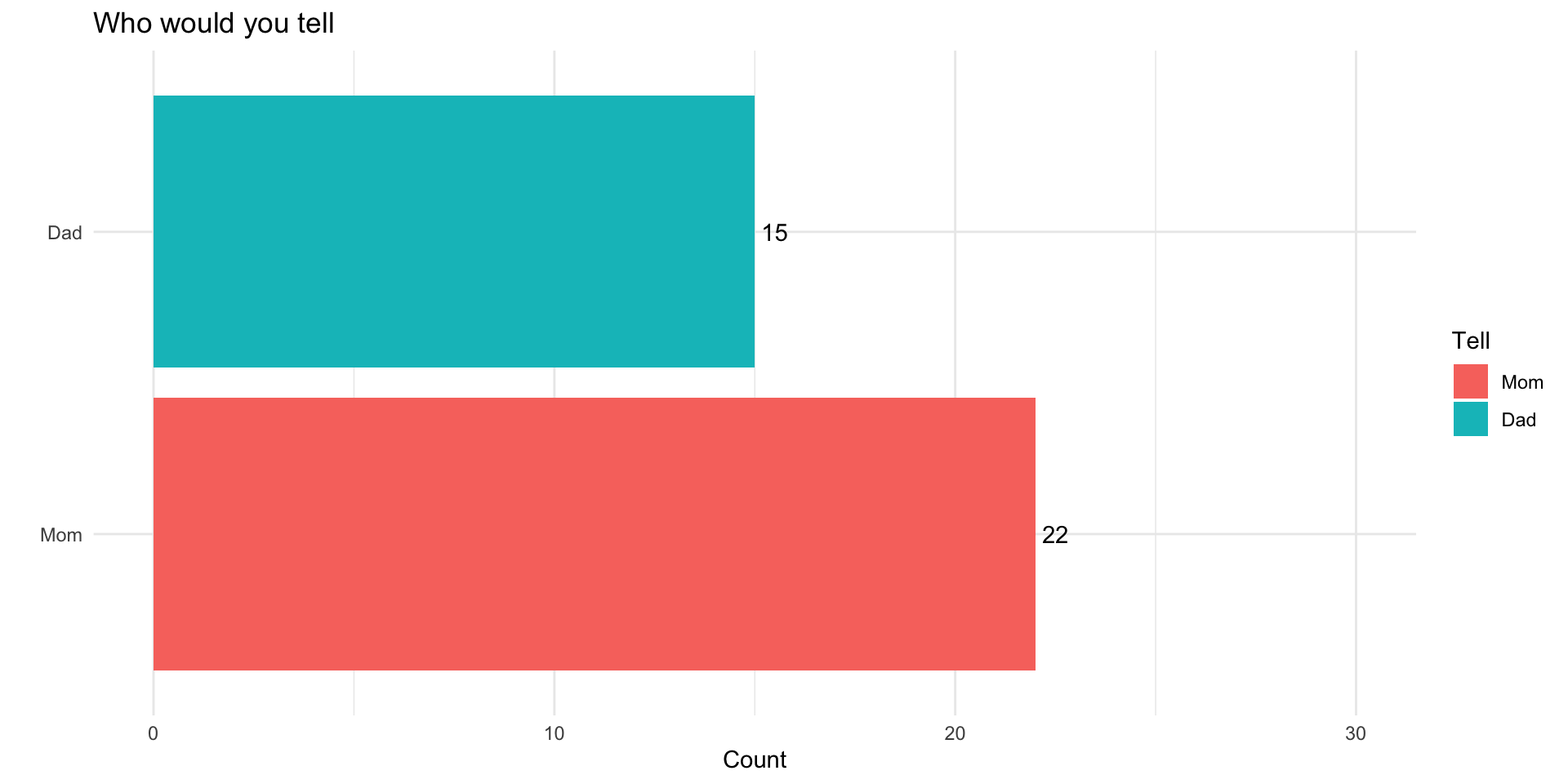

Is brunch a viable first date?

You’ve committed a murder

Why do you ask?

It’s cousin Nick’s fault…

I think it’s a funny question that reveals interesting things about our relationships with our parents

What assumptions are we making when we ask this question?

You’re not a murderer

You don’t know someone who’s committed a murder or been murdered

You’ve got a mom and dad

How might we make this question better?

- Use a screener question: “Would you feel comfortable…”

- “Pipe” in responses from a prior question: “Who are two people who raised you…”

What questions we ask and how we ask them matters

Review

Last class we discussed

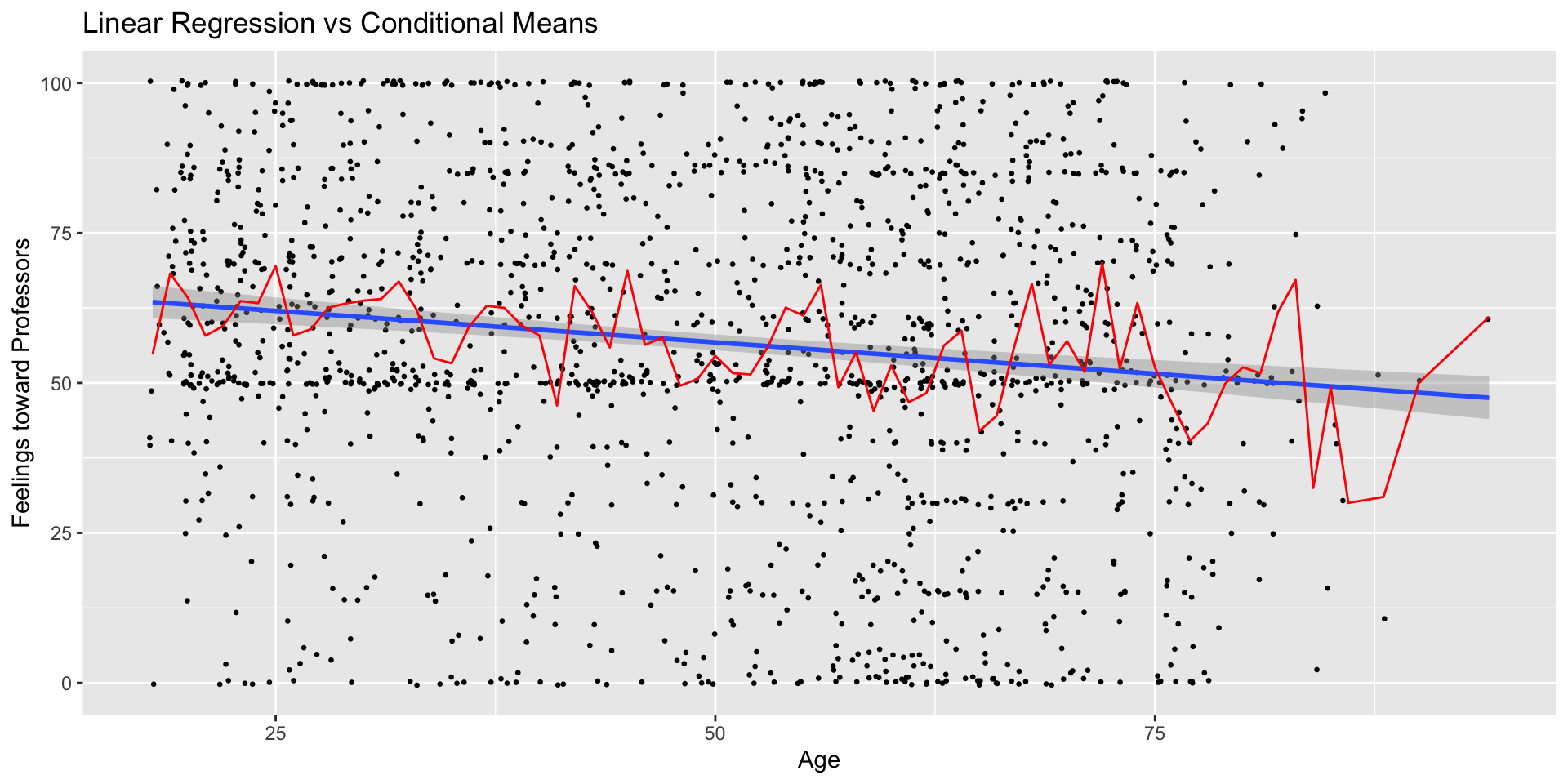

Some basic statistical concepts around linear regression

- Linear regression is a tool for describing relationships

- Interpret regression models in terms of the sign, size, and significance of coefficients.

The Folk Theory of Democracy as reasonable criteria for how democracy functions and what it requires of its citizens

Introduced some theoretical challenges to this folk theory

- Even if citizens possessed idealized preferences, democracy would still struggle with aggregating them into policy

Statistics and POLS 1140 Part I

Describing what’s typical

What do you need to know?

Today we’ll cover some basic tools that social scientists use to make the following kinds of inferences:

Descriptive

- How do you feel about college professors?

Predictive

- How do feelings about college professors change with age?

Causal

- What’s the effect of having a great professor on these attitudes?

A lot of statistics is about describing what’s typical.

- Mean: A typical value of some variable

- Variance: How much do values vary around the mean

- Standard Deviation: How much do values typically vary around the mean

- Covariance: How two variables vary together

- Correlation: A standardized measure of covariance

- Conditional Means: The mean of a variable conditional on the values of another variable
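To make these quantities concrete, here is a minimal sketch in base R. The ages and ratings are made-up toy values (not the NES data), and the variable names are only for illustration.

```r
# Hypothetical toy data: ages and 0-100 feeling-thermometer ratings (made up)
age <- c(22, 25, 31, 38, 44, 52, 60, 67)
ft  <- c(70, 65, 62, 58, 55, 50, 48, 40)

mean_ft    <- mean(ft)        # a typical value
var_ft     <- var(ft)         # squared deviations from the mean (n - 1 denominator)
sd_ft      <- sd(ft)          # square root of the variance, in the original units
cov_age_ft <- cov(age, ft)    # how age and ratings vary together
cor_age_ft <- cor(age, ft)    # covariance rescaled to lie between -1 and 1

# Conditional means: the mean rating within each age group
age_group  <- ifelse(age < 30, "Under 30", "Over 30")
cond_means <- tapply(ft, age_group, mean)

print(c(mean = mean_ft, variance = var_ft, sd = sd_ft))
print(c(covariance = cov_age_ft, correlation = cor_age_ft))
print(cond_means)
```

In this toy example the correlation is negative because ratings fall as age rises, and the two conditional means summarize the same pattern in a coarser way.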

What do you need to know?

A lot of statistical modeling revolves around estimating conditional means

- How does some outcome change with some explanatory variable

Linear regression (and extensions of the linear model) are the primary tool for making these estimates

Statistical inference revolves around quantifying uncertainty about what could have happened.

- Variances, covariances, and related quantities are central to this process.

Linear regression

- Linear regression provides a linear estimate of the conditional expectation function.

- The technical details are not important to this class. Our goal by the end of the week will be to develop skills to interpret and critique linear models

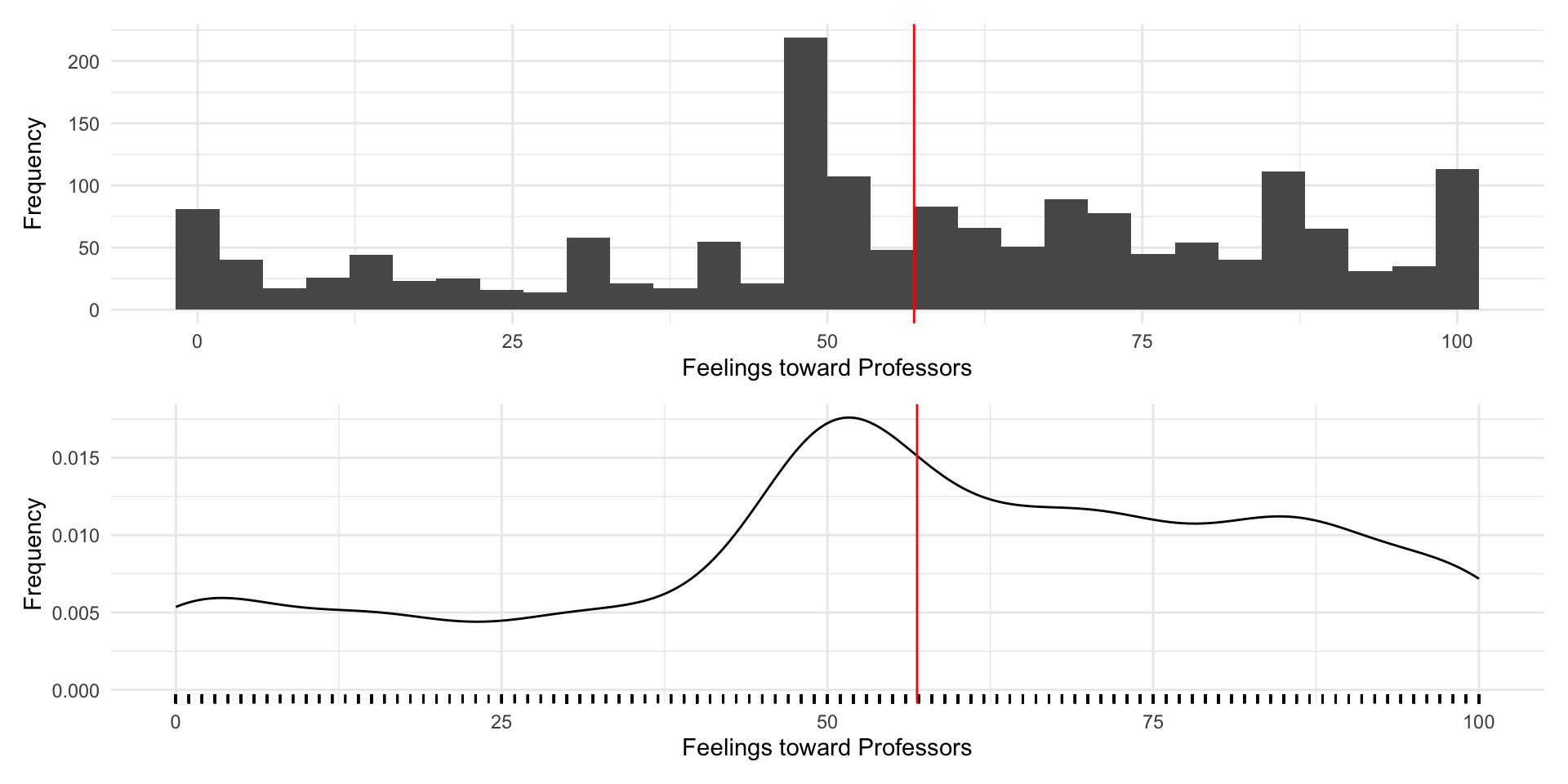

How do people feel about college professors?

Let’s explore this question using data from the 2024 NES Pilot Study

Outcome: Feelings toward professors measured on 0-100 point scale

Predictors:

- Age (in years)

- Education (college degree)

What are our expectations?

| Measure | Mean |
|---|---|
| Unconditional | |
| Overall | 56.88 |
| Conditional | |
| No college degree | 53.33 |
| College degree | 61.03 |
| Under 30 | 61.95 |
| Over Thirty | 55.68 |

Now let’s see how we can use regression to explore these relationships

| term | estimate | std.error | statistic | p.value | conf.low | conf.high | df | outcome |
|---|---|---|---|---|---|---|---|---|
| (Intercept) | 61.95 | 1.33 | 46.50 | 0 | 59.34 | 64.57 | 1691 | ft_professors |
| age_catOver Thirty | -6.27 | 1.54 | -4.07 | 0 | -9.30 | -3.25 | 1691 | ft_professors |
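The columns in this table are linked by simple arithmetic. The sketch below takes only the reported estimate, standard error, and degrees of freedom for the Over Thirty coefficient and rebuilds the test statistic, the 95% confidence interval, and the p-value. Because the inputs are rounded to two decimals, the results should match the table only up to rounding.

```r
# Values copied from the regression table (Over Thirty vs. Under 30)
estimate  <- -6.27
std_error <- 1.54
df_resid  <- 1691

t_stat  <- estimate / std_error                     # test statistic
crit    <- qt(0.975, df = df_resid)                 # critical value for a 95% interval
ci      <- estimate + c(-1, 1) * crit * std_error   # lower and upper limits
p_value <- 2 * pt(-abs(t_stat), df = df_resid)      # two-sided p-value

# The two (overly) simple heuristics
excludes_zero   <- ci[1] > 0 || ci[2] < 0
below_threshold <- p_value < 0.05

print(round(c(statistic = t_stat, conf.low = ci[1], conf.high = ci[2]), 2))
print(c(excludes_zero = excludes_zero, below_threshold = below_threshold))
```

The p-value is reported as 0 in the table only because it is rounded; the computed value is small but positive.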

| | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|
| (Intercept) | 61.95*** | 67.26*** | 53.33*** | 64.35*** |
| | (1.33) | (1.81) | (0.94) | (1.83) |
| age_catOver Thirty | -6.27*** | | | |
| | (1.54) | | | |
| age | | -0.21*** | | -0.23*** |
| | | (0.04) | | (0.04) |
| has_degreeCollege degree | | | 7.70*** | 8.36*** |
| | | | (1.34) | (1.34) |
| R2 | 0.01 | 0.02 | 0.02 | 0.04 |
| Adj. R2 | 0.01 | 0.02 | 0.02 | 0.04 |
| Num. obs. | 1693 | 1693 | 1693 | 1693 |
| RMSE | 27.80 | 27.65 | 27.64 | 27.35 |

***p < 0.001; **p < 0.01; *p < 0.05
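With a single binary predictor, the regression coefficients are just the conditional means in another form: the intercept is the mean of the reference group and the coefficient is the difference in means. The sketch below checks this first with the reported numbers and then on simulated data. The simulated data are hypothetical and only loosely patterned on the table; they are not the NES sample.

```r
# Arithmetic check using the reported numbers
no_degree_mean <- 53.33                      # intercept in Model 3
degree_coef    <- 7.70                       # has_degree coefficient in Model 3
degree_mean    <- no_degree_mean + degree_coef
print(degree_mean)                           # compare with the College degree row of the means table

# The same logic on simulated (hypothetical) data
set.seed(1140)
has_degree <- rbinom(500, size = 1, prob = 0.4)
rating     <- 53 + 8 * has_degree + rnorm(500, sd = 27)
fit        <- lm(rating ~ has_degree)

group_means <- tapply(rating, has_degree, mean)
print(coef(fit))      # intercept and difference in means
print(group_means)    # mean rating when has_degree is 0 and when it is 1
```

Once a second predictor enters the model, as in Model 4, the coefficients no longer reduce to raw group means, which is why the degree coefficient moves from 7.70 to 8.36.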

Summary

- Statistics is about describing what’s typical

- What’s a typical value

- What’s a typical amount of variation

- How does an outcome typically vary with a predictor

- Regression is a tool for providing linear estimates of conditional means

- A way of fitting lines to data

- A way of partitioning variance

- We interpret regression coefficients in terms of their

- sign (positive or negative)

- size (substantively meaningful)

- statistical significance (more later)

Definitions of public opinion

How do you define public opinion?

Take a few minutes to write down your own definition of public opinion.

Now take a few minutes to share your definitions with the person next to you

Five Definitions

Five definitions from Glynn et al. (2015)

Aggregation beliefs

Public vs private

Political conflict

Elite vs mass

Lies! Damn lies!

1. Public opinion is an aggregation of individual opinions

“Polling is merely an instrument for gauging public opinion. When a President, or any other leader, pays attention to poll results, he is, in effect, paying attention to the views of the people. Any other interpretation is nonsense.” – George Gallup (1972)

2. Public opinion is a reflection of majority beliefs

“Opinions on controversial issues that one can express in public without isolating oneself”– Elisabeth Noelle-Neumann (1984)

3. Public opinion is found in the conflict of group interests

“The people are involved in public affairs by the conflict system. Conflicts open up questions for public intervention.” – E.E. Schattschneider (1960)

4. Public opinion is simply a reflection of elite influence

“The voice of the people is but an echo. The output of an echo chamber bears an inevitable and invariable relation to the input. As candidates and parties clamor for attention and vie for popular support, the people’s verdict can be no more than a selective reflection from the alternatives and outlooks presented to them.” - V.O. Key (1968)

5. Public opinion does not exist

Bourdieu (1972) argues polls assume

- Everyone can have an opinion

- All opinions are equally valid

- We all agree on which questions are worth asking

Polling thus represents and reconstructs political interests

So what’s the right definition?

None of them

All of them

It depends on the question you’re asking

Each definition has strengths and weaknesses

What are the ways we could study public opinion?

Let’s take a few minutes to write down the different ways we could study public opinion

Think about the places, venues, methods, and results of these approaches

Are some more suited for some definitions of public opinion than others?

Ok let’s share our responses

- How many people wrote down something involving polls and surveys?

- How many people wrote down something else?

A brief history of public opinion

Some caveats

I am not a historian

This is a very abridged and largely western history

Provide a broad overview that introduces recurrent themes

Claims

Public opinion is a reflection of the “public sphere”

Public opinion is contextual and a function of politics, society, technology and ???

Debates about public opinion and its role in society are persistent

The formal study of public opinion as we know it is more recent.

Early examples of public opinion

Some early debates about public opinion

“In the same way, when there are many, each can bring his share of goodness and moral prudence; and when all meet together, the people may thus become something in the nature of a single person who – as he has many feet, many hands and many senses – may also have many qualities of character and intelligence” - Aristotle (Politics)

Some early debates about public opinion

“Then, my friend, we must not regard what the many say of us; but what he, the one man who has understanding of just and unjust, will say, and what the truth will say.” Plato (The Crito)

Some early debates about public opinion

“Men are so simple, and governed so absolutely by their present needs, that he who wishes to deceive will never fail in finding willing dupes” – Machiavelli

Early Technologies of Public Opinion

Oration and rhetoric

Mass demonstration

The written word

Some important “Revolutions” In Public Opinion

- Philosophical

- Social Contract Theory

- Utilitarianism

- Political

- Democratic revolutions

- Expansions of suffrage and political rights

- Progressive reforms like direct democracy

- Socio-Cultural:

- Economic changes

- Public spaces

- Nature and means of communication

The First Straw Polls

Proto-polls emerge around the 1824 Election

- Emerge in response to failures of state caucus to produce clear nominee

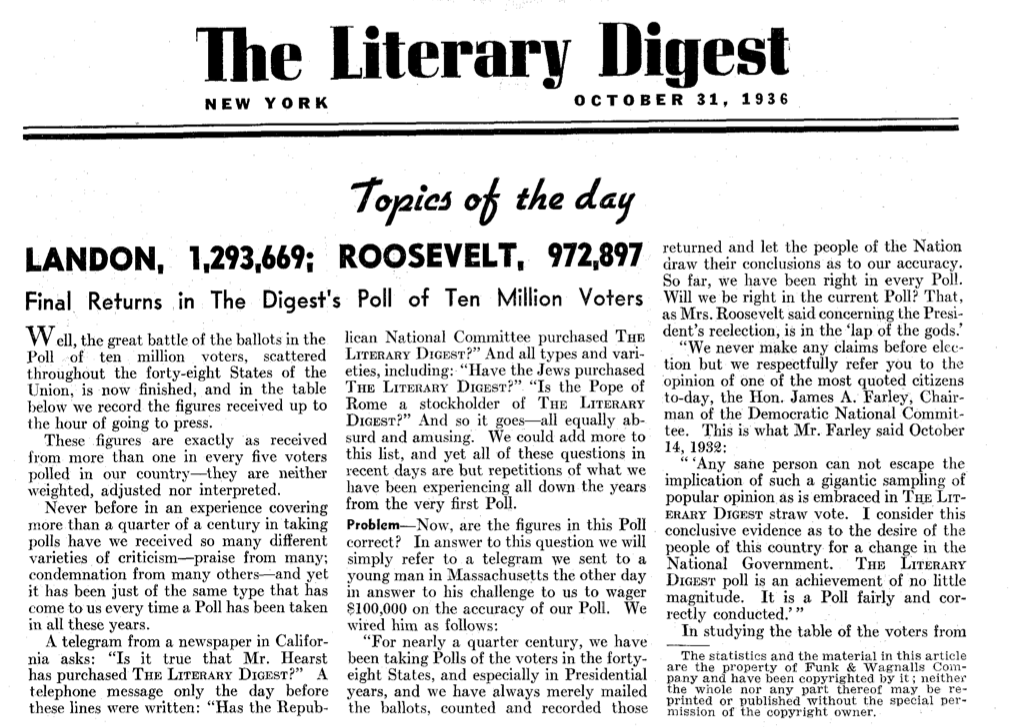

The Literary Digest Polls

Began polling readership in 1916

Correctly called Wilson, Harding, Coolidge, Hoover, and Roosevelt in 1932…

But wildly off in 1936…

Three Eras of Survey Research (Groves 2011)

- Birth (1930-1960)

- Expansion (1960-1990)

- Adaption (1990-present)

Birth (1930-1960)

Advances in statistical theory provide foundation for probability-based sampling

Birth of modern polling firms like Gallup and Roper, with a keen interest in politics in general, and elections in particular

Expansion (1960-1990)

Polling becomes ubiquitous as advances in technology (telephones, computers) lower costs

Most surveys have high response rates

Two key schools of thought in political science:

- The Columbia School

- The Michigan School

The Columbia School

Concerned with how individuals are influenced by the media

Sociological studies based in specific localities (Sandusky, OH; Elmira, NY; Decatur, IL)

Propose a two-step flow of communication, where information from the media is filtered through “opinion leaders”

Personal Influence and the two-step flow of communication

The Michigan School

Concerned with how people make political decisions

Based off of surveys that would become the American National Election Studies

Most people cast their votes on the basis of partisan identifications largely inherited from their families

Adaption (1990-present)

Declining response rates

The internet and the return of non-probability samples

New sources of data and new tools of analysis

Summary

Debates about public opinion are longstanding

Changes in the public sphere change our conceptions of public opinion

Need to be clear about our questions of interest and the contexts in which we’re studying them

Class Survey

Public opinion and democratic theory

The Folk Theory of Democracy

What is Democracy?

Dahl 1998 lays out some general requirements for democracy:

Effective participation: people have to have an opportunity to express their preferences

Voting equality: votes should count equally

Enlightened understanding: people should understand alternatives

Control of the agenda: people can bring new items to the agenda; can’t rig the rules of the game

Inclusion of adults: not just propertied, white dudes

The Folk Theory of Democracy

Achen and Bartels argue that if democracy “begins with voters,” then these voters:

Have genuine opinions on policies

Take time to form those opinions

Elect politicians to represent those opinions

Those politicians then do as they’re told

Chapter 2 contrasts these “democratic ideals” with “dreary” empirical realities

Two Models of Democratic Theory

- Populism

- Democracy is about translating the will of the people into action

- Leadership selection

- Democracy is about selecting good leaders

How might these models work in practice?

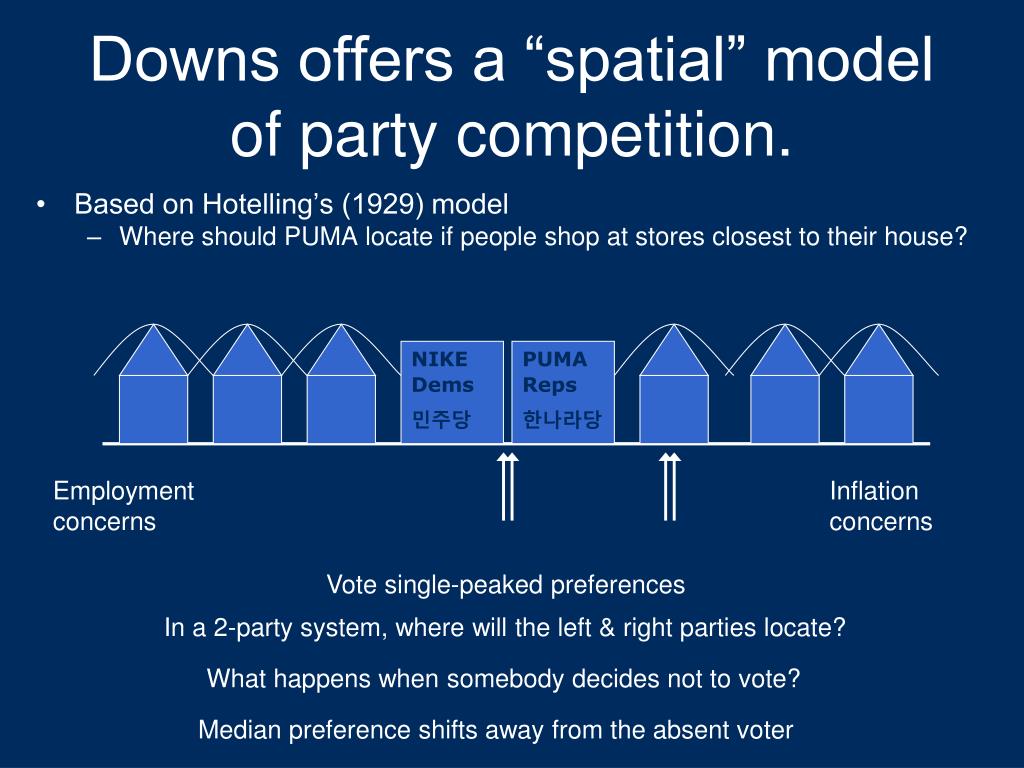

Spatial Models of Voting

Represent voters’ preferences as “ideal points” on an ideological spectrum

For a two party system, with first past the post elections, parties will converge on the preferences of the median voter.

Seemed empirically true in the 1950s/60s
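A minimal sketch of the logic behind convergence, under simplifying assumptions that are mine rather than the lecture's: voters sit on a single left-right dimension, each votes for whichever position is closer to their ideal point, and the number of voters is odd. The ideal points are simulated.

```r
set.seed(42)
# Hypothetical voters on a left-right scale from -1 to 1
ideal_points <- runif(101, min = -1, max = 1)
median_voter <- median(ideal_points)

# Share of voters who are strictly closer to position a than to position b
vote_share <- function(a, b, voters) mean(abs(voters - a) < abs(voters - b))

# Pit the median position against a grid of alternative positions
challengers <- seq(-1, 1, by = 0.1)
shares <- sapply(challengers, function(b) vote_share(median_voter, b, ideal_points))

print(round(data.frame(challenger = challengers, median_share = shares), 2))
```

Under these assumptions the median position wins a majority against every alternative, which is why two vote-seeking parties have an incentive to move toward it.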

Spatial Models of Voting: The Median Voter Theorem

Source: James Vreeland

Limits of the Spatial Model

- Theoretical

- Condorcet’s paradox

- Arrow’s Impossibility Theorem

- Empirical

- Are preferences really unidimensional?

- Are preferences politically meaningful?

- Are preferences reflected in policy?

Condorcet’s Paradox: British Preferences for Brexit

- A > B: Remain vs May Deal (Soft exit) -> Remain wins

- B > C: May Deal (Soft exit) vs No Deal (Hard exit) -> May Deal wins

- C > A: Remain vs No Deal (Hard exit) -> No Deal wins
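One way such a cycle can arise is sketched below. The three equal-sized blocs and their rankings are invented for illustration (they are not estimates of actual British preferences), and `pairwise_winner` is a hypothetical helper.

```r
# Three hypothetical blocs of equal size, each ranking options from most to least preferred
rankings <- list(
  bloc_1 = c("Remain", "May Deal", "No Deal"),
  bloc_2 = c("May Deal", "No Deal", "Remain"),
  bloc_3 = c("No Deal", "Remain", "May Deal")
)

# Winner of a head-to-head majority vote between options a and b
pairwise_winner <- function(a, b, rankings) {
  votes_for_a <- sum(sapply(rankings, function(r) match(a, r) < match(b, r)))
  if (votes_for_a > length(rankings) / 2) a else b
}

print(pairwise_winner("Remain", "May Deal", rankings))
print(pairwise_winner("May Deal", "No Deal", rankings))
print(pairwise_winner("Remain", "No Deal", rankings))
```

Each bloc has a perfectly coherent ranking, yet the three majority contests chase each other in a circle, so there is no option that beats all the others.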

Voting Cycles

Voting cycles – where the order of consideration influences the outcome – are one example of the logical challenges for the folk theory of democracy

Arrow’s Impossibility Theorem

The problem of voting cycles – any outcome is possible depending on the order of consideration – is one example of a more general challenge of aggregating preferences

Arrow (1950): given some reasonable criteria for fairness:

- No dictators, Universality, IIA, Monotonicity, Sovereignty

No rank-order electoral system (e.g. Plurality, Instant Runoff, Borda) can be designed that always satisfies all these criteria

Limits of the Spatial Model

- Theoretical

- Condorcet’s paradox

- Arrow’s Impossibility Theorem

- Empirical

- Are preferences really unidimensional?

- Are preferences politically meaningful?

- Are preferences represented politically?

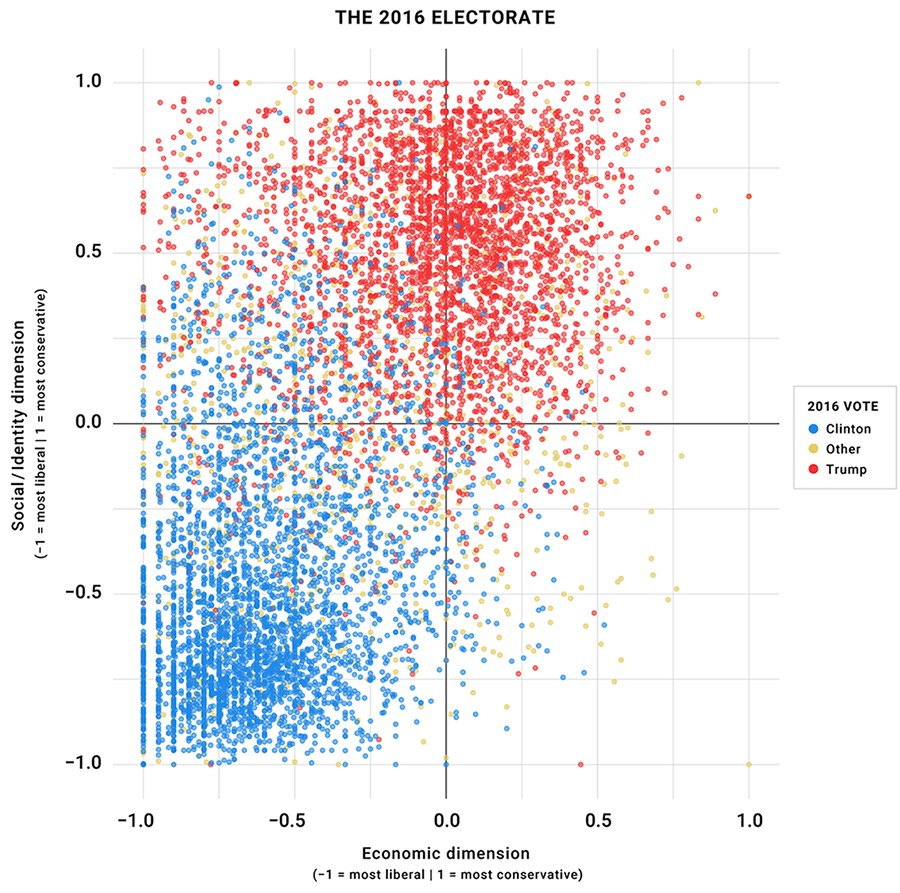

Are preferences unidimensional?

- The median voter theorem depends on nicely behaved preferences (p. 26)

- one dimension

- single peaked

- If we allow for multiple dimensions, this stable equilibrium vanishes

Are preferences politically meaningful?

- Do people have clear issue preferences?

- Do people think in ideological terms?

- Are people’s attitudes consistent over time?

- Do these preferences influence voting?

Framing Effects:

For example, 63% to 65% of Americans in the mid-1980s said that the federal government was spending too little on “assistance to the poor”; but only 20% to 25% said that it was spending too little on “welfare” (Rasinski 1989, 391)

Converse (1964) finds:

“[A]bout 3% of voters were clearly classifiable as “ideologues,” with another 12% qualifying as “near-ideologues”; the vast majority of voters (and an even larger proportion of nonvoters) seemed to think about parties and candidates in terms of group interests or the “nature of the times,” or in ways that conveyed “no shred of policy significance whatever”

And only modest correlations across similar issue positions

Again from Converse (1964):

Successive responses to the same questions turned out to be remarkably inconsistent. The correlation coefficients measuring the temporal stability of responses for any given issue from one interview to the next ranged from a bit less than .50 down to a bit less than .30, suggesting that issue views are “extremely labile for individuals over time”

- Causal ambiguity on issue voting (p. 42). Could be:

- issue voting (issues inform vote choice)

- persuasion (candidate positions change issue preferences)

- projection (issue preferences influence candidate perceptions)

The “Miracle” of Aggregation

Even if individual citizens are “rationally ignorant,” holding incoherent positions that are easily swayed by things like question wording, perhaps democracy can still function in the aggregate

Achen and Bartels argue this is also not likely to be the case

Condorcet’s jury theorem: As long as voters have a better than even chance of making the right choice (p > 0.5), with enough voters, society will tend to make the right decision

- Lau and Redlawsk (2006) “found that about 70% of voters, on average, chose the candidate who best matched their own expressed preferences”

Achen and Bartels: Only works if the errors are independent

When thousands or millions of voters misconstrue the same relevant fact or are swayed by the same vivid campaign ad, no amount of aggregation will produce the requisite miracle; individual voters’ “errors” will not cancel out in the overall election outcome (p. 41)
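The contrast between independent and shared errors can be sketched numerically. The individual accuracy of 0.7 echoes the Lau and Redlawsk figure; the "shared shock" scenario (a 20% chance that everyone's accuracy drops to 0.4 in a given election) is a hypothetical of mine, chosen only to show the mechanism.

```r
# Probability that a majority of n independent voters is correct,
# when each voter is correct with probability p (n odd, so no ties)
p_majority_correct <- function(n, p) 1 - pbinom(floor(n / 2), size = n, prob = p)

n_voters    <- c(1, 11, 101, 1001)
independent <- sapply(n_voters, p_majority_correct, p = 0.7)

# Correlated errors (hypothetical): with probability 0.2 a shared shock,
# such as a misleading ad, lowers everyone's accuracy to 0.4
p_shock    <- 0.2
correlated <- sapply(n_voters, function(n) {
  (1 - p_shock) * p_majority_correct(n, 0.7) + p_shock * p_majority_correct(n, 0.4)
})

print(round(data.frame(n_voters, independent, correlated), 3))
```

With independent errors the majority is almost always right once the electorate is large. With the shared shock, adding voters stops helping: the majority gets it wrong whenever the shock hits, so accuracy levels off near 1 minus the shock probability.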

Achen and Bartels discuss a range of research suggesting:

- Levels of political knowledge tend to be low (p. 37)

most people “know jaw-droppingly little about politics.”

Information shortcuts (cues and heuristics) can be unreliable (p. 39)

“Fully informed” preferences differ markedly from actual preferences (p. 40)

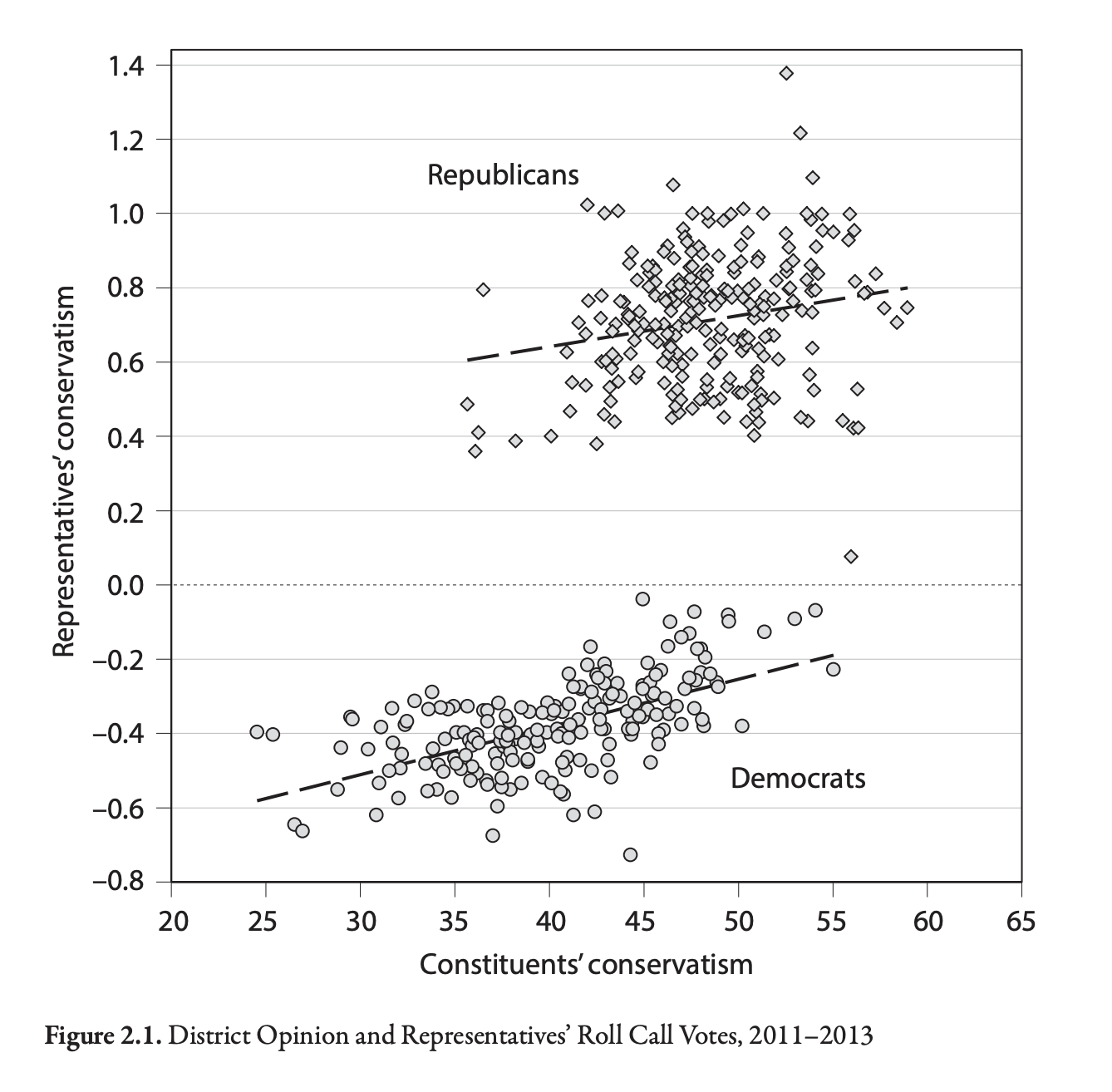

Are preferences represented by politicians?

What does Figure 2.1 show?

What would the Folk Theory predict?

Statistics and POLS 1140 Part II

Inference and Uncertainty

Statistical inference involves quantifying uncertainty about what could have happened.

Today, we’ll introduce the concepts of:

Samples and Populations

Confidence Intervals and Hypothesis Tests

There is more content here than we’ll discuss in class.

You don’t need to know how to conduct a hypothesis test or construct a confidence interval

You do need a functional understanding about how to use these tools to understand claims about statistical significance

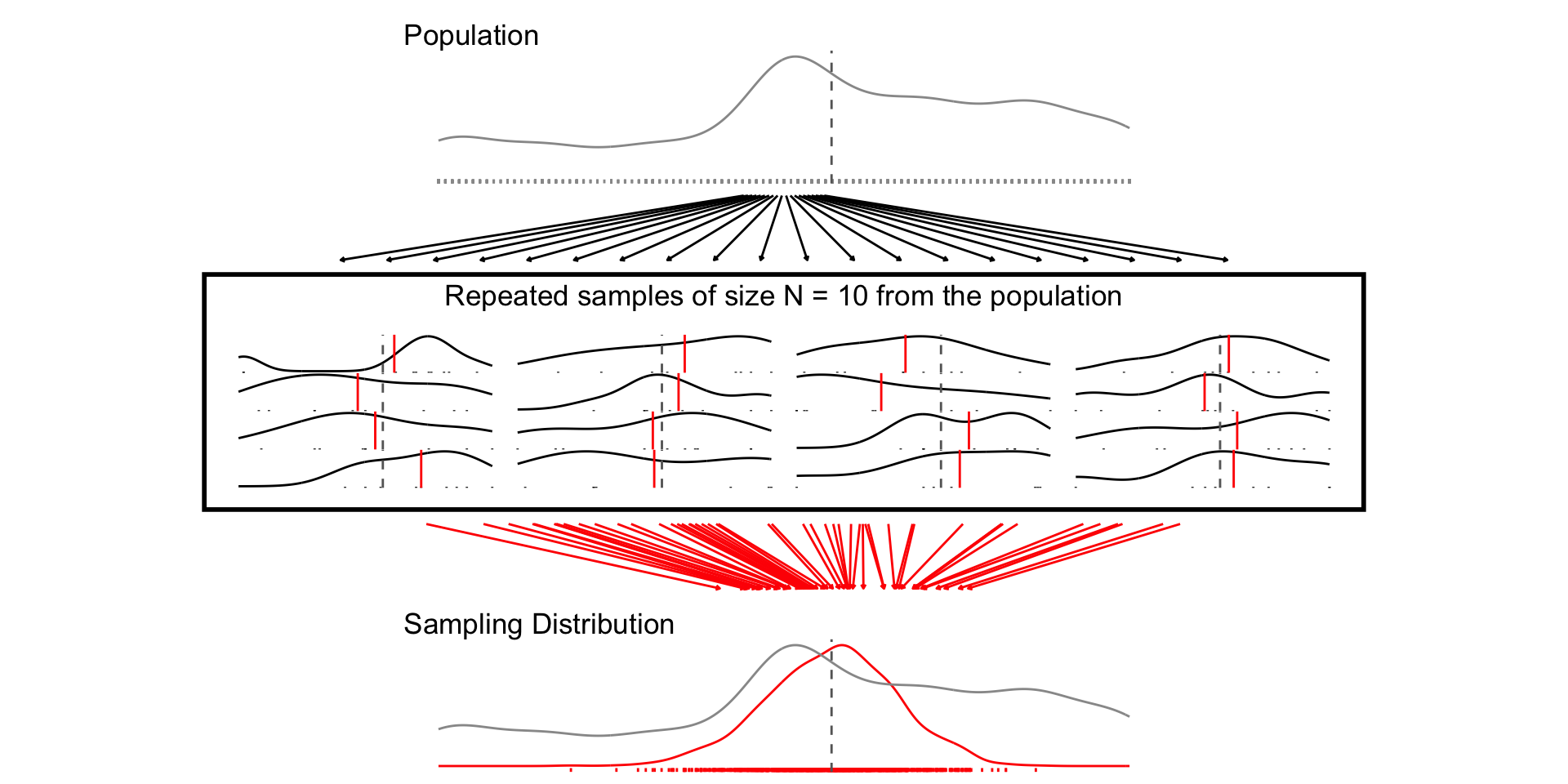

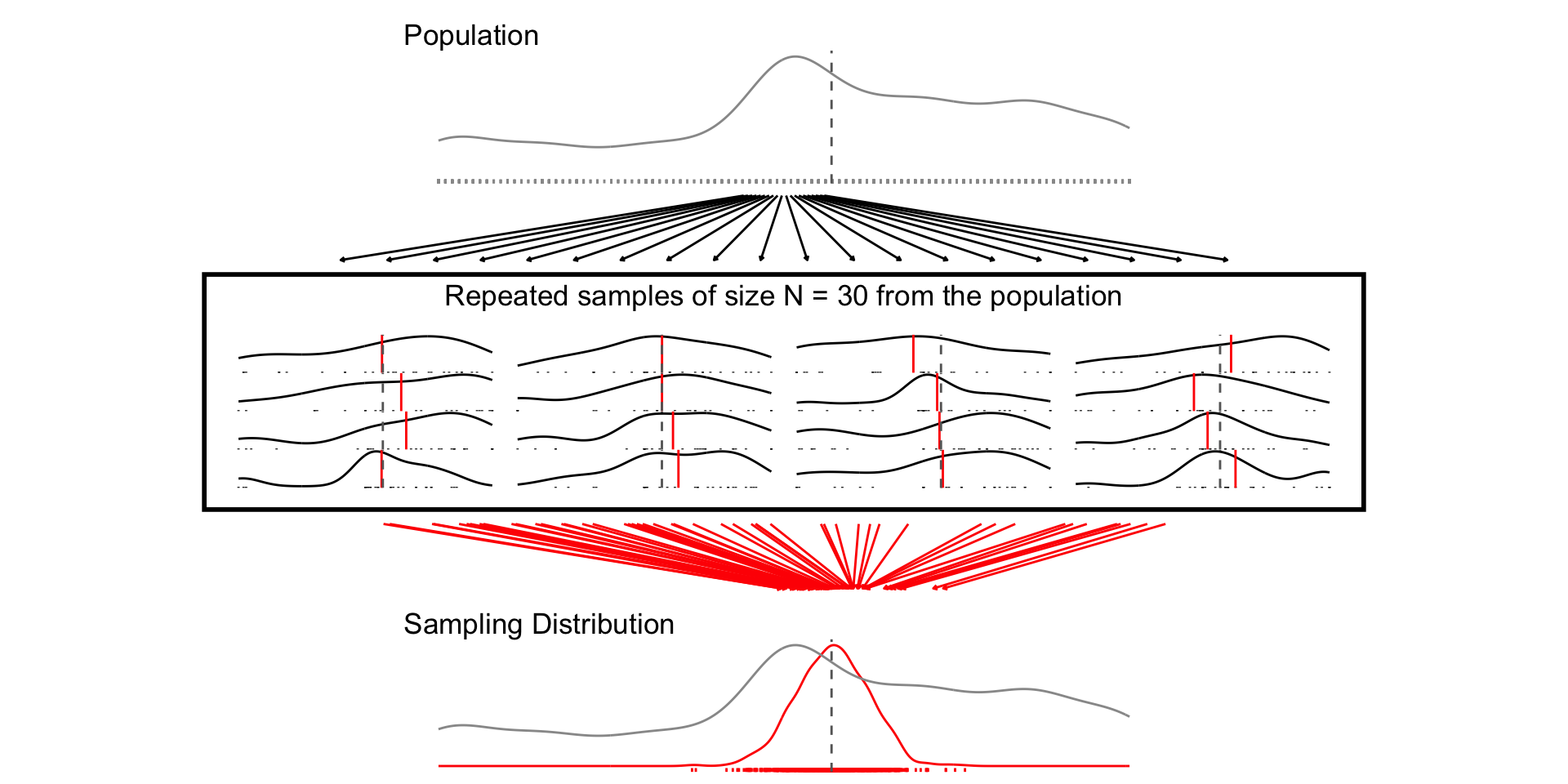

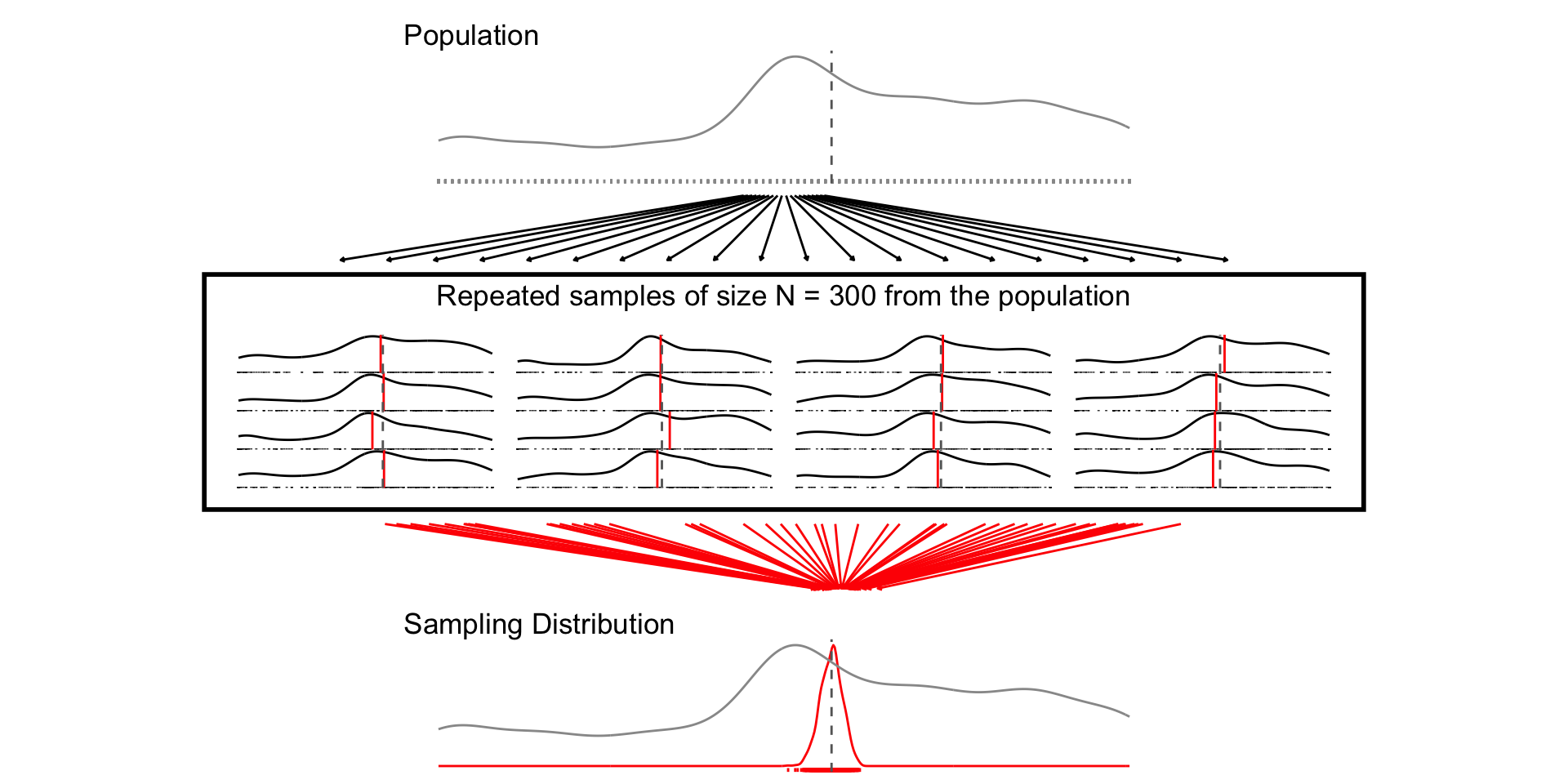

Sampling distributions

When we conduct a survey we are trying to learn about a population by generalizing from a specific sample

What would have happened if we had a different sample? How different would our result be?

Let’s treat the 2024 NES pilot as the population

Take repeated samples of size N = 10, 30, 300

For each sample of size N, calculate the sample mean of feelings toward professors

Plot the distribution of sample means (i.e. the sampling distribution)

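# Load packages: tidyverse (tibble, dplyr, purrr, ggplot2) and patchwork (wrap_plots, plot_layout)

library(tidyverse)

library(patchwork)

# Load Data (uncomment to download; the code below expects a data frame named df)

# load(url("https://pols1600.paultesta.org/files/data/nes24.rda"))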

# ---- Population ----

# Population average

mu_prof <- mean(df$ft_professors, na.rm=T)

# Population standard deviation

sd_prof <- sd(df$ft_professors, na.rm = T)

# ---- Function to Take Repeated Samples From Data ----

sample_data_fn <- function(

dat=df, var=ft_professors, samps=1000, sample_size=10,

resample = F){

if(resample == F){

df <- tibble(

sim = 1:samps,

distribution = "Sampling",

size = sample_size,

sample_from = "Population",

pop_mean = dat %>% pull(!!enquo(var)) %>% mean(., na.rm=T),

pop_sd = dat %>% pull(!!enquo(var)) %>% sd(., na.rm=T),

se_asymp = pop_sd / sqrt(size),

ll_asymp = pop_mean - 1.96*se_asymp,

ul_asymp = pop_mean + 1.96*se_asymp,

) %>%

mutate(

sample = purrr::map(sim, ~ slice_sample(dat %>% select(!!enquo(var)), n = sample_size, replace = F)),

sample_mean = purrr::map_dbl(sample, \(x) x %>% pull(!!enquo(var)) %>% mean(.,na.rm=T)),

ll = sample_mean - 1.96*sd(sample_mean),

ul = sample_mean + 1.96*sd(sample_mean)

)

}

if(resample == T){

df <- tibble(

sim = 1:samps,

distribution = "Resampling",

size = sample_size,

sample_from = "Sample",

pop_mean = dat %>% pull(!!enquo(var)) %>% mean(., na.rm=T),

pop_sd = dat %>% pull(!!enquo(var)) %>% sd(., na.rm=T),

se_asymp = pop_sd / sqrt(size),

ll_asymp = pop_mean - 1.96*se_asymp,

ul_asymp = pop_mean + 1.96*se_asymp,

) %>%

mutate(

sample = purrr::map(sim, ~ slice_sample(dat %>% select(!!enquo(var)), n = sample_size, replace = T)),

sample_mean = purrr::map_dbl(sample, \(x) x %>% pull(!!enquo(var)) %>% mean(.,na.rm=T))

)

}

return(df)

}

# ---- Plot Single Distribution -----

plot_distribution <- function(the_pop,the_samp, the_var, ...){

mu_pop <- the_pop %>% pull(!!enquo(the_var)) %>% mean(., na.rm=T)

mu_samp <- the_samp %>% pull(!!enquo(the_var)) %>% mean(., na.rm=T)

ll <- the_pop %>% pull(!!enquo(the_var)) %>% as.numeric() %>% min(., na.rm=T)

ul <- the_pop %>% pull(!!enquo(the_var)) %>% as.numeric() %>% max(., na.rm=T)

p<- the_samp %>%

ggplot(aes(!!enquo(the_var)))+

geom_density()+

geom_rug()+

theme_void()+

geom_vline(xintercept = mu_samp, col = "red")+

geom_vline(xintercept = mu_pop, col = "grey40",linetype = "dashed")+

xlim(ll,ul)

return(p)

}

# ---- Plot multiple distributions ----

plot_samples <- function(pop, x, variable,n_rows = 4, ...){

sample_plots <- x$sample[1:(4*n_rows)] %>%

purrr::map( \(x) plot_distribution(the_pop=pop, the_samp = x,

the_var = !!enquo(variable)))

p <- wrap_elements(wrap_plots(sample_plots[1:(4*n_rows)], ncol=4))

return(p)

}

# ---- Plot Combined Figure ----

plot_figure_fn <- function(

d=df,

v=age,

sim=1000,

size=10,

rows = 4){

# Population average

mu <- d %>% pull(!!enquo(v)) %>% mean(., na.rm=T)

sd <- d %>% pull(!!enquo(v)) %>% sd(., na.rm=T)

se <- sd/sqrt(size)

# Range

ll <- d %>% pull(!!enquo(v)) %>% as.numeric() %>% min(., na.rm=T)

ul <- d %>% pull(!!enquo(v)) %>% as.numeric() %>% max(., na.rm=T)

# Population standard deviation

# Sample data

samp_df <- sample_data_fn(dat=d, var = !!enquo(v), samps = sim, sample_size = size)

# Plot Population

p_pop <- d %>%

ggplot(aes(!!enquo(v)))+

geom_density(col ="grey60")+

geom_rug(col = "grey60", )+

geom_vline(xintercept = mu, col="grey40", linetype="dashed")+

theme_void()+

labs(title ="Population")+

xlim(ll,ul)+

theme(plot.title = element_text(hjust = 0))

p_samps <- plot_samples(pop=d, x= samp_df,variable = !!enquo(v),

n_rows = rows)

p_samps <- p_samps +

ggtitle(paste("Repeated samples of size N =",size,"from the population"))+

theme(plot.title = element_text(hjust = 0.5),

plot.background = element_rect(

fill = NA, colour = 'black', linewidth = 2)

)

p_dist <- samp_df %>%

ggplot(aes(sample_mean))+

geom_density(col="red",aes(y= after_stat(ndensity)))+

geom_rug(col="red")+

geom_density(data = d, aes(!!enquo(v), y= after_stat(ndensity)), # use the function argument d, not the global df

col="grey60")+

geom_vline(xintercept = mu, col="grey40", linetype="dashed")+

xlim(ll,ul)+

theme_void()+

labs(

title = "Sampling Distribution"

)+ theme(plot.title = element_text(hjust = 0))

range_upper_df <- tibble(

x = seq( ((ll+ul)/2 -5), ((ll+ul)/2 +5), length.out = 20),

xend = seq(ll-5, ul+5, length.out = 20),

y = rep(9, 20),

yend = rep(1, 20)

)

p_upper <- range_upper_df %>%

ggplot(aes(x=x, xend = xend, y=y,yend=yend))+

geom_segment(

arrow = arrow(length = unit(0.05, "npc"))

)+

theme_void()+

coord_fixed(ylim=c(0,10),

xlim =c(ll-5,ul+5),clip="off")

# Lower

range_df <- samp_df %>%

summarise(

min = min(sample_mean),

max = max(sample_mean),

mean = mean(sample_mean)

)

plot_df <- tibble(

id = 1:50,

# x = sort(rnorm(50, mu, sd)),

x = sort(runif(50, ll, ul)),

xend = sort(rnorm(50, mu, se)),

y = 9,

yend = 1

)

p_lower <- plot_df %>%

ggplot(aes(x,y, group =id))+

geom_segment(aes(xend=xend, yend=yend),

col = "red",arrow = arrow(length = unit(0.05, "npc"))

)+

theme_void()+

coord_fixed(ylim=c(0,10),xlim = c(ll,ul),clip="off")

design <-"##AAAA##

##AAAA##

##AAAA##

BBBBBBBB

BBBBBBBB

#CCCCCC#

#CCCCCC#

#CCCCCC#

#CCCCCC#

DDDDDDDD

DDDDDDDD

##EEEE##

##EEEE##

##EEEE##"

fig <- p_pop / p_upper / p_samps / p_lower / p_dist +

plot_layout(design = design)

return(fig)

}

# ---- Samples and Figures Varying Sample Size ----

## N = 10

set.seed(1234)

samp_n10 <- sample_data_fn(sample_size = 10, samps = 1000)

set.seed(1234)

fig_n10 <- plot_figure_fn(v=ft_professors,size = 10)

## N = 30

set.seed(1234)

samp_n30 <- sample_data_fn(sample_size = 30, samps = 1000)

set.seed(1234)

fig_n30 <- plot_figure_fn(v=ft_professors,size = 30,rows=4)

## N = 300

set.seed(1234)

samp_n300 <- sample_data_fn(sample_size = 300, samps = 1000)

set.seed(1234)

fig_n300 <- plot_figure_fn(v=ft_professors,size = 300)

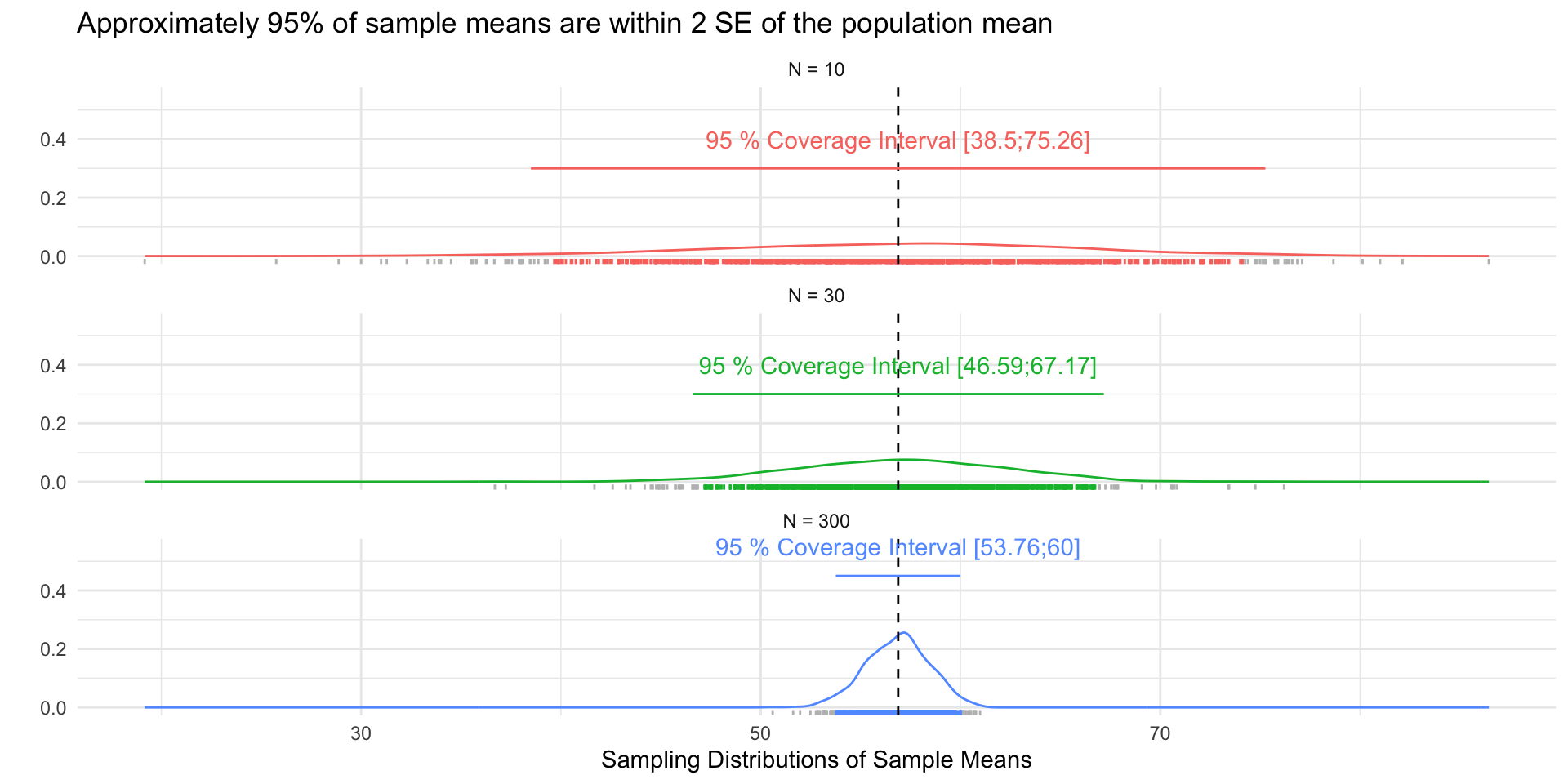

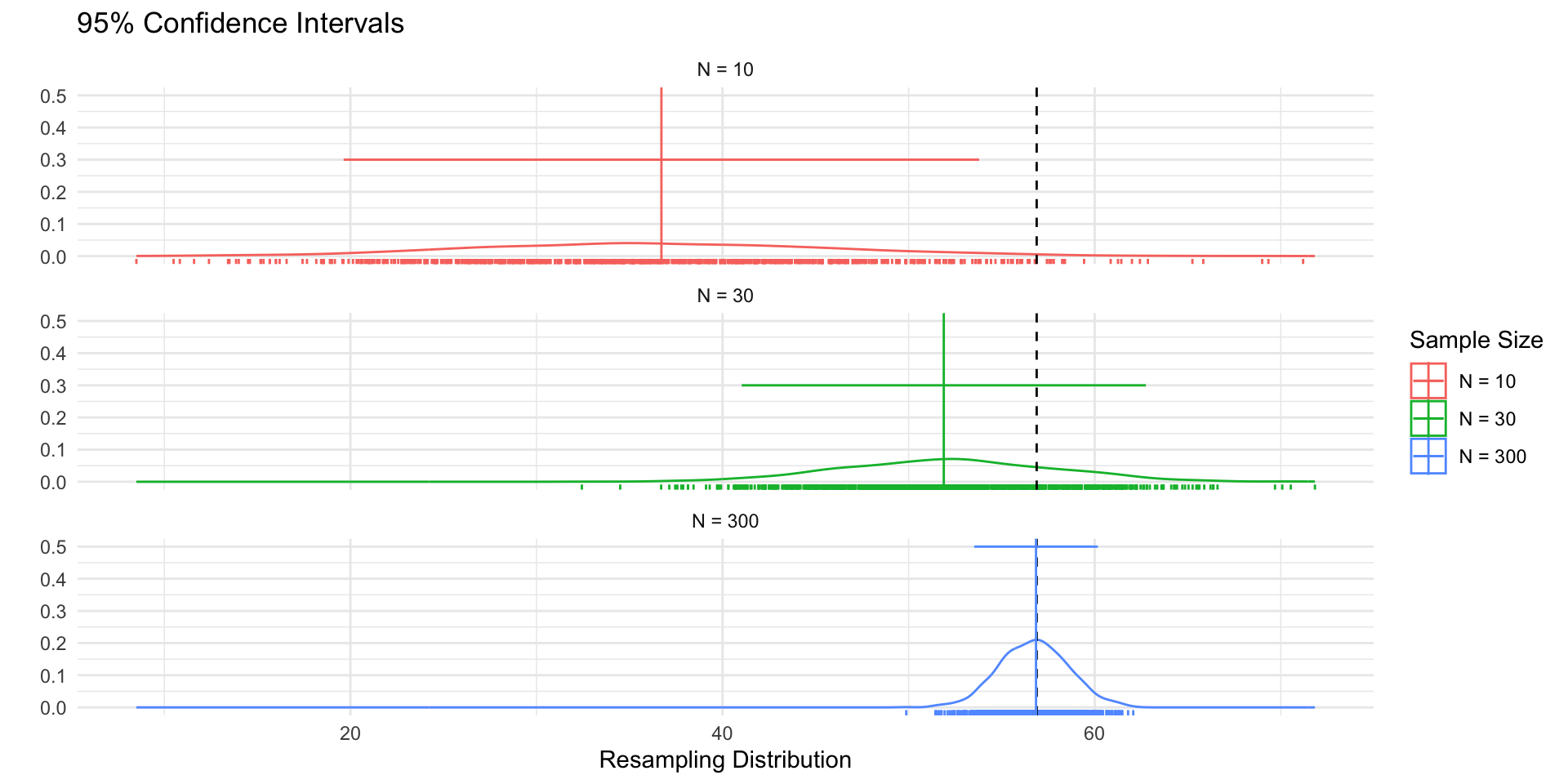

Random sampling ensures the sampling distribution is centered around the population value (unbiased estimator)

As the sample size increases:

The width of the sampling distribution decreases (Law of Large Numbers)

The shape of the sampling distribution approximates a Normal distribution (Central Limit Theorem)
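These claims can be checked with a much smaller simulation than the plotting code above. The sketch below uses a simulated stand-in population of 0-100 ratings (hypothetical, not the NES data) and base R only; `sampling_dist` is an illustrative helper name.

```r
set.seed(1234)
# Hypothetical "population" of 0-100 ratings (simulated, not the NES data)
population <- pmin(pmax(round(rnorm(5000, mean = 57, sd = 28)), 0), 100)

# Draw many samples of size n (without replacement) and keep each sample's mean
sampling_dist <- function(n, reps = 1000) {
  replicate(reps, mean(sample(population, size = n)))
}

sizes        <- c(10, 30, 300)
sample_means <- lapply(sizes, sampling_dist)

summary_table <- data.frame(
  n      = sizes,
  center = sapply(sample_means, mean),  # stays close to the population mean
  spread = sapply(sample_means, sd)     # shrinks as n grows
)
print(summary_table)
print(mean(population))
```

Plotting `hist(sample_means[[3]])` would show the roughly bell-shaped distribution that the Central Limit Theorem describes, even though the simulated population itself is clipped at 0 and 100.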

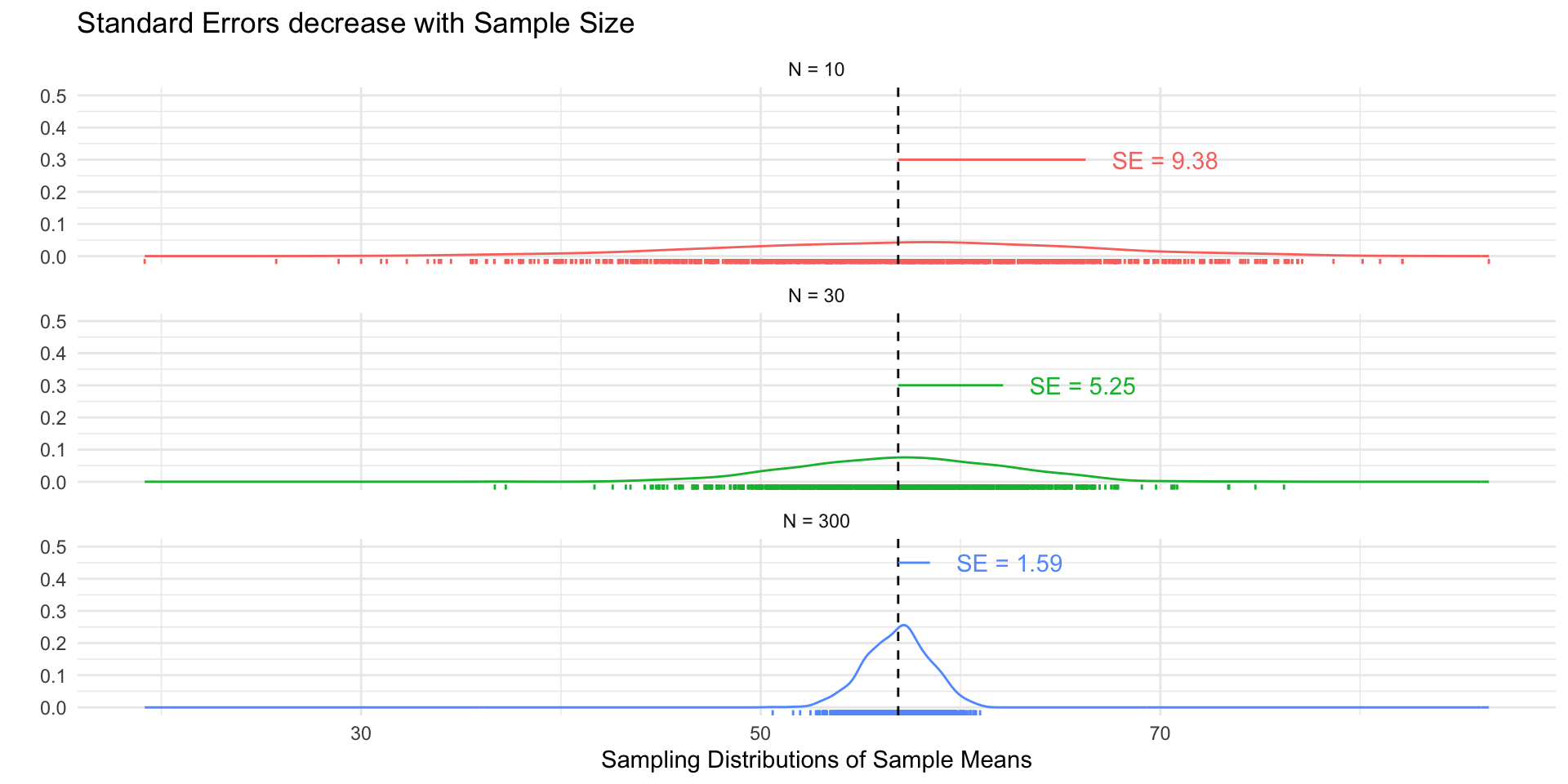

Standard errors

The standard error (SE) is simply the standard deviation of the sampling distribution.

The SE decreases as the sample size increases (by the LLN):

Approximately 95% of the sample means will be within 2 SEs of the population mean (CLT)
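In symbols, and assuming simple random sampling from a population with mean $\mu$ and standard deviation $\sigma$ (notation introduced here for illustration), the two statements above can be written as:

```latex
\[
SE(\bar{X}) = \frac{\sigma}{\sqrt{n}},
\qquad
\Pr\left( \left| \bar{X} - \mu \right| \le 1.96 \times SE(\bar{X}) \right) \approx 0.95
\]
```

The first expression shows why the SE shrinks with the square root of the sample size: quadrupling $n$ only halves the SE. The second is the more precise version of the "within 2 SEs" rule of thumb and relies on the sampling distribution being approximately Normal.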

se_df <- tibble(

`Sample Size` = factor(paste("N =",c(10,30, 300))),

se = c(sd(samp_n10$sample_mean),

sd(samp_n30$sample_mean),

sd(samp_n300$sample_mean)),

SE = paste("SE =", round(se,2)),

ll = mu_prof,

ul = mu_prof + se,

y = c(.3,.3,.45),

yend = y

)

ci_df <- tibble(

`Sample Size` = factor(paste("N =",c(10,30, 300))),

se = c(sd(samp_n10$sample_mean),

sd(samp_n30$sample_mean),

sd(samp_n300$sample_mean)),

mu = mu_prof,

ll = round(mu_prof - 1.96 *se,2),

ul = round(mu_prof + 1.96 *se,2),

ci = paste("95 % Coverage Interval [",ll,";",ul,"]",sep=""),

y = c(.3,.3,.45),

yend = y

)

sim_df <- samp_n10 %>%

bind_rows(samp_n30) %>%

bind_rows(samp_n300) %>%

mutate(

`Sample Size` = factor(paste("N =",size))

) %>%

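left_join(ci_df, by = "Sample Size", suffix = c("", "_ci")) %>% # join on sample size only; ci_df's ll/ul get a _ci suffix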

mutate(

Coverage = case_when(

sample_mean > ll_asymp & sample_mean < ul_asymp & size == 10~ "#F8766D",

sample_mean > ll_asymp & sample_mean < ul_asymp & size == 30~ "#00BA38",

sample_mean > ll_asymp & sample_mean < ul_asymp & size == 300~ "#619CFF",

T ~ "grey"

)

)

fig_se <- sim_df %>%

ggplot(aes(sample_mean, col = `Sample Size`))+

geom_density()+

geom_rug()+

geom_vline(xintercept = mu_prof, linetype = "dashed")+

theme_minimal()+

facet_wrap(~`Sample Size`, ncol=1)+

ylim(0,.5)+

guides(col="none")+

geom_segment(

data = se_df,

aes(x= ll, xend =ul, y = y, yend = yend)

)+

geom_text(

data = se_df,

aes(x = ul, y =y, label = SE),

hjust = -.25

) +

labs(

y = "",

x = "Sampling Distributions of Sample Means",

title = "Standard Errors decrease with Sample Size"

)

fig_coverage <- sim_df %>%

ggplot(aes(sample_mean,col=`Sample Size`))+

geom_density()+

geom_rug(col=sim_df$Coverage)+

geom_vline(xintercept = mu_prof, linetype = "dashed")+

theme_minimal()+

facet_wrap(~`Sample Size`, ncol=1)+

ylim(0,.55)+

guides(col="none")+

geom_segment(

data = ci_df,

aes(x= ll, xend =ul, y = y, yend = yend)

)+

geom_text(

data = ci_df,

aes(x = mu, y =y, label = ci),

hjust = .5,

nudge_y =.1

) +

labs(

y = "",

x = "Sampling Distributions of Sample Means",

title = "Approximately 95% of sample means are within 2 SE of the population mean"

)

How do we calculate a standard error from a single sample?

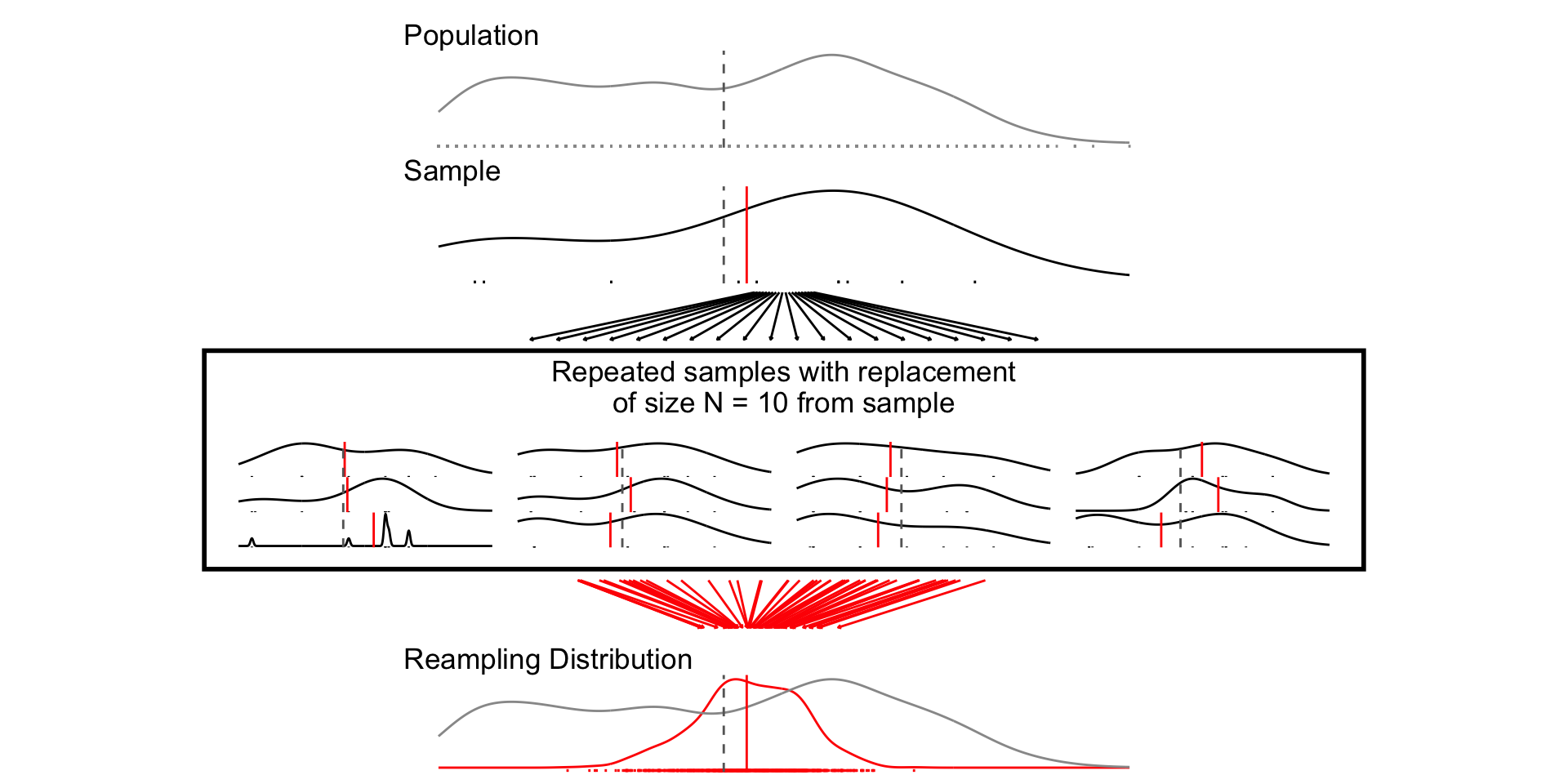

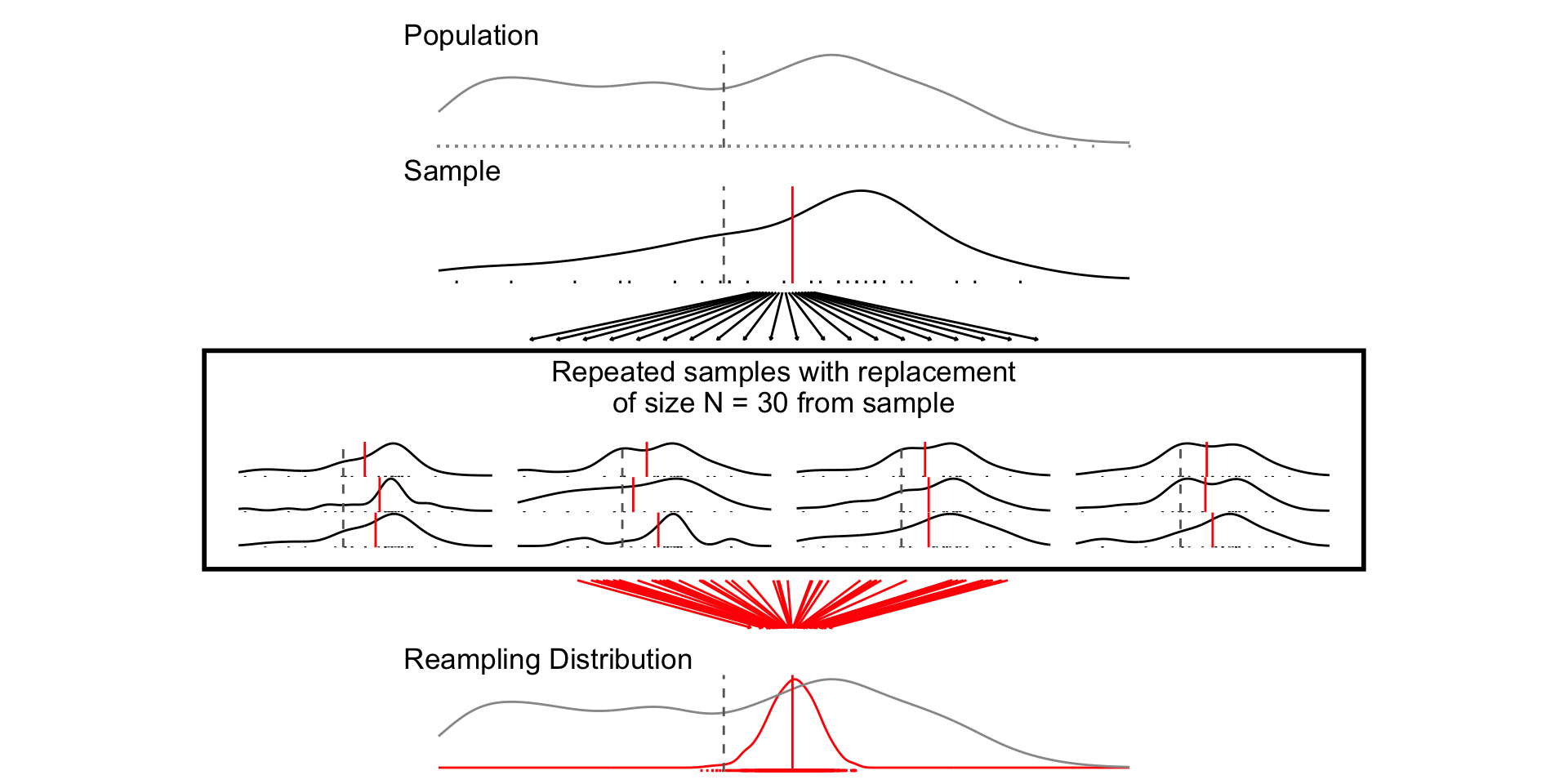

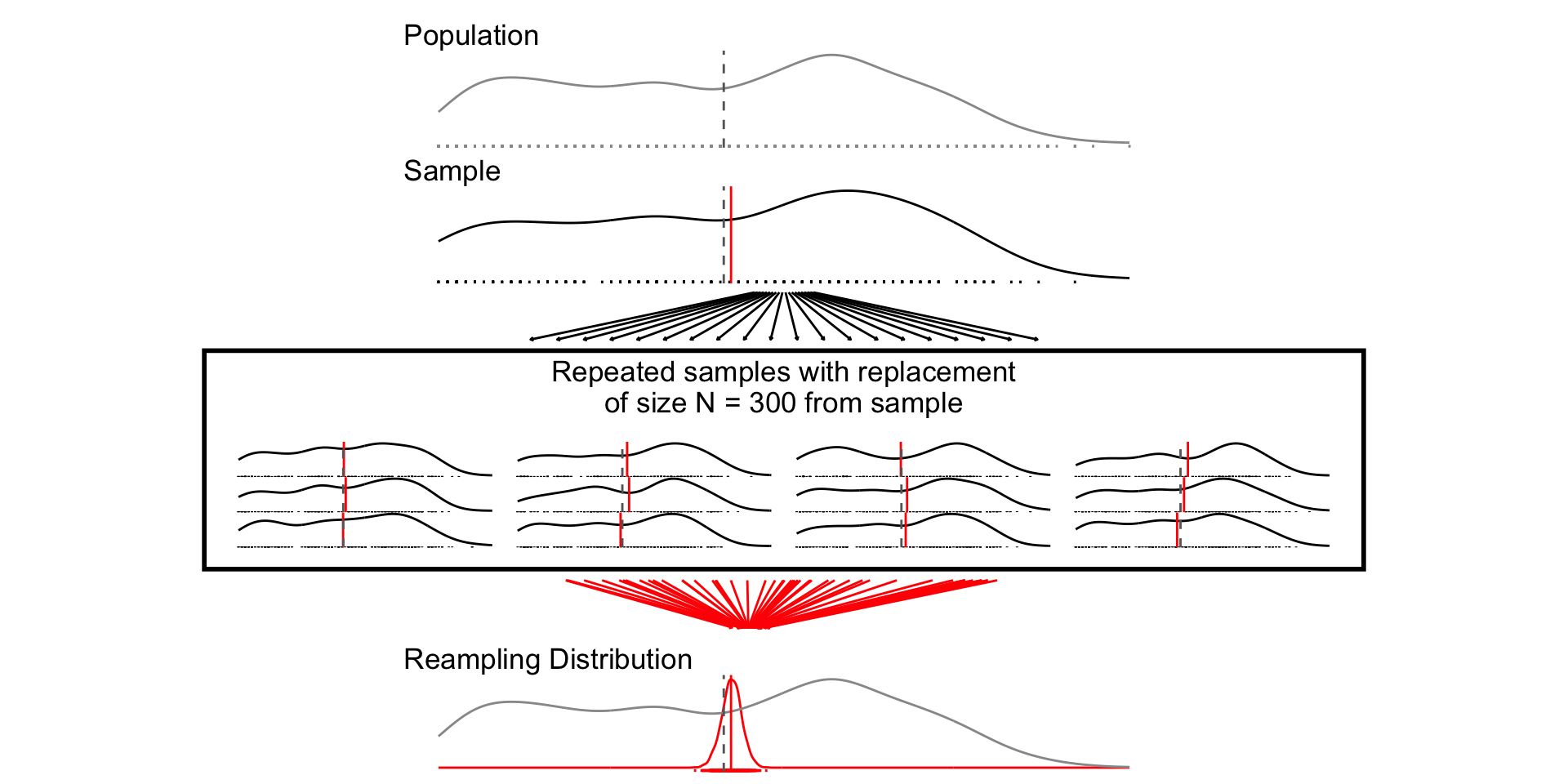

Calculating standard errors

- Simulation:

- Treat sample as population

- Sample with replacement (“bootstrapping”)

- Estimate SE from standard deviation of resampling distribution (“plug-in principle”)

- Analytic

- Characterize sampling distribution from sample mean and variance via asymptotic theory (the LLN and CLT)

- For a sample mean, $SE = s / \sqrt{n}$, where $s$ is the sample standard deviation and $n$ is the sample size
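Both routes can be compared on a single sample. The sketch below is a stripped-down, base R version of the idea, using one simulated sample of 300 ratings (hypothetical, not the NES data) rather than the helper functions defined earlier.

```r
set.seed(2024)
# One hypothetical sample of 300 ratings on a 0-100 scale (simulated)
one_sample <- pmin(pmax(rnorm(300, mean = 57, sd = 28), 0), 100)
n <- length(one_sample)

# Simulation: resample the sample with replacement and take the mean each time
boot_means <- replicate(2000, mean(sample(one_sample, size = n, replace = TRUE)))
se_boot    <- sd(boot_means)

# Analytic: sample standard deviation divided by the square root of n
se_analytic <- sd(one_sample) / sqrt(n)

print(round(c(bootstrap = se_boot, analytic = se_analytic), 2))
```

The two estimates should be close but not identical, since the bootstrap value carries some simulation noise of its own.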

plot_resampling_fn <- function(d=df, v=age, sim=1000, size=10,rows=3){

# Population average

mu <- d %>% pull(!!enquo(v)) %>% mean(., na.rm=T)

# Population standard deviation and SE

sd <- d %>% pull(!!enquo(v)) %>% sd(., na.rm=T)

se <- sd/sqrt(size)

# Range

ll <- d %>% pull(!!enquo(v)) %>% as.numeric() %>% min(., na.rm=T)

ul <- d %>% pull(!!enquo(v)) %>% as.numeric() %>% max(., na.rm=T)

# Resampling with replace

# Draw 1 Sample

sample <- sample_data_fn(dat=d, var = !!enquo(v), samps = 1, sample_size = size, resample = F)

samp_df <- as.data.frame(sample$sample)

# Resample from sample with replacement

resamp_df <- sample_data_fn(dat=samp_df, var = !!enquo(v), samps = sim, sample_size = size, resample = T)

# Plot Population

p_pop <- d %>%

ggplot(aes(!!enquo(v)))+

geom_density(col ="grey60")+

geom_rug(col = "grey60", )+

geom_vline(xintercept = mu, col="grey40", linetype="dashed")+

theme_void()+

labs(title ="Population")+

xlim(ll,ul)+

theme(plot.title = element_text(hjust = 0))

p_samp <- plot_distribution(the_pop = d,

the_samp = samp_df,

the_var = !!enquo(v))+ # plot the variable passed in as v rather than hard-coding age

labs(title ="Sample")+

xlim(ll,ul)+

theme(plot.title = element_text(hjust = 0))

p_samps <- plot_samples(pop=d, x= resamp_df,variable = !!enquo(v), n_rows =rows)

p_samps <- p_samps +

ggtitle(paste("Repeated samples with replacement\nof size N =",size,"from sample"))+

theme(plot.title = element_text(hjust = 0.5),

plot.background = element_rect(

fill = NA, colour = 'black', linewidth = 2)

)

# Resampling Distribution

p_dist <- resamp_df %>%

ggplot(aes(sample_mean))+

geom_density(col="red",aes(y= after_stat(ndensity)))+

geom_rug(col="red")+

geom_density(data = d, aes(!!enquo(v), y= after_stat(ndensity)), # use the function argument d, not the global df

col="grey60")+

geom_vline(xintercept = unique(resamp_df$pop_mean), col="red", linetype="solid")+

geom_vline(xintercept = mu, col="grey40", linetype="dashed")+

xlim(ll,ul)+

theme_void()+

labs(

title = "Resampling Distribution"

)+ theme(plot.title = element_text(hjust = 0))

range_upper_df <- tibble(

x = seq( ((ll+ul)/2 -5), ((ll+ul)/2 +5), length.out = 20),

xend = seq(ll-5, ul+5, length.out = 20),

y = rep(9, 20),

yend = rep(1, 20)

)

p_upper <- range_upper_df %>%

ggplot(aes(x=x, xend = xend, y=y,yend=yend))+

geom_segment(

arrow = arrow(length = unit(0.05, "npc"))

)+

theme_void()+

coord_fixed(ylim=c(0,10),

xlim =c(ll-5,ul+5),clip="off")

# Lower

range_df <- resamp_df %>%

summarise(

min = min(sample_mean),

max = max(sample_mean),

mean = mean(sample_mean)

)

plot_df <- tibble(

id = 1:50,

# x = sort(rnorm(50, mu, sd)),

x = sort(runif(50, ll, ul)),

xend = sort(rnorm(50, unique(resamp_df$pop_mean), se)),

y = 9,

yend = 1

)

p_lower <- plot_df %>%

ggplot(aes(x,y, group =id))+

geom_segment(aes(xend=xend, yend=yend),

col = "red",arrow = arrow(length = unit(0.05, "npc"))

)+

theme_void()+

coord_fixed(ylim=c(0,10),xlim = c(ll,ul),clip="off")

design <-"##AAAA##

##AAAA##

##AAAA##

##BBBB##

##BBBB##

##BBBB##

CCCCCCCC

CCCCCCCC

#DDDDDD#

#DDDDDD#

#DDDDDD#

#DDDDDD#

EEEEEEEE

EEEEEEEE

##FFFF##

##FFFF##

##FFFF##"

fig <- p_pop / p_samp /p_upper / p_samps / p_lower / p_dist +

plot_layout(design = design)

return(fig)

}

set.seed(123)

resamp_n10 <- sample_data_fn(

dat = sample_data_fn(samps = 1, sample_size = 10, resample = T)$sample %>% as.data.frame(),

sample_size = 10,

resample = T)

set.seed(123)

fig_n10_bs <- plot_resampling_fn(size=10)

set.seed(12345)

resamp_n30 <- sample_data_fn(

dat = sample_data_fn(samps = 1, sample_size = 30, resample = T)$sample %>% as.data.frame(),

samps = 1000, sample_size = 30, resample = T)

set.seed(12345)

fig_n30_bs <- plot_resampling_fn(size=30)

set.seed(1234)

resamp_n300 <- sample_data_fn(

dat = sample_data_fn(samps = 1, sample_size = 300, resample = T)$sample %>% as.data.frame(),

samps = 1000, sample_size = 300, resample = T)

set.seed(1234)

fig_n300_bs <- plot_resampling_fn(size=300)

| Sample size | Bootstrap SE | Analytic SE |
|---|---|---|
| N = 10 | 9.85 | 8.82 |
| N = 30 | 5.79 | 5.09 |
| N = 300 | 1.85 | 1.61 |
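The comparison between a bootstrap and an analytic standard error can be sketched in a few lines of base R. This is a minimal illustration on simulated data, not the course data; the helper functions used in the slides (`sample_data_fn`, `plot_resampling_fn`) are not reproduced, and the variable names below are hypothetical.

```r
# Hypothetical data: one simulated sample of 30 ages (not the course data)
set.seed(1140)
samp <- rnorm(30, mean = 50, sd = 15)

# Analytic standard error of the mean: s / sqrt(n)
analytic_se <- sd(samp) / sqrt(length(samp))

# Bootstrap standard error: resample the sample with replacement many times,
# compute the mean of each resample, and take the sd of those means
boot_means <- replicate(
  2000,
  mean(sample(samp, size = length(samp), replace = TRUE))
)
boot_se <- sd(boot_means)

round(c(bootstrap = boot_se, analytic = analytic_se), 2)
```

The two numbers should be close but not identical, since the bootstrap value depends on the random resamples.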

Confidence intervals

Confidence intervals:

provide a way of quantifying uncertainty about estimates

describe a range of plausible values for an estimate

are a function of the standard error of the estimate and a critical value determined by the chosen level of confidence

Calculating a confidence interval

Choose level of confidence

Derive the sampling distribution of the estimator

- Simulation: bootstrap re-sampling

- Analytically: computing its mean and variance.

Compute the standard error

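Compute the critical value for the chosen level of confidence (e.g. about 1.96 for a 95% interval based on the normal distribution)

Compute the lower and upper confidence limits

- lower limit = estimate − critical value × standard error

- upper limit = estimate + critical value × standard error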
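The steps above can be sketched in base R. This is an illustration on simulated data under a normal approximation, with a percentile bootstrap shown as the simulation-based alternative; the data and object names are assumptions, not the slides' own.

```r
set.seed(1140)
samp <- rnorm(30, mean = 50, sd = 15)    # hypothetical sample

# Step 1: choose a level of confidence (95%)
conf_level <- 0.95

# Steps 2-3: estimate and its analytic standard error
est <- mean(samp)
se  <- sd(samp) / sqrt(length(samp))

# Step 4: critical value from the normal distribution (about 1.96)
crit <- qnorm(1 - (1 - conf_level) / 2)

# Step 5: lower and upper confidence limits
ci_analytic <- c(lower = est - crit * se, upper = est + crit * se)

# Simulation alternative: percentile bootstrap interval
boot_means <- replicate(2000, mean(sample(samp, replace = TRUE)))
ci_boot <- quantile(boot_means, probs = c(0.025, 0.975))

ci_analytic
ci_boot
```

With small samples, a t-based critical value (`qt()`) is a common substitute for `qnorm()`.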

resamp_df <-

resamp_n10 %>%

bind_rows(resamp_n30) %>%

bind_rows(resamp_n300) %>%

mutate(

`Sample Size` = factor(paste("N =",size))

)

resamp_ci_df <- tibble(

`Sample Size` = factor(paste("N =",c(10,30,300))),

mu = unique(resamp_df$pop_mean),

ll = unique(resamp_df$ll_asymp),

ul = unique(resamp_df$ul_asymp),

y = c(.3, .3,.5)

)

fig_ci1 <- resamp_df %>%

ggplot(aes(sample_mean,

col = `Sample Size`))+

geom_density()+

geom_rug()+

geom_vline(xintercept = mu_prof, linetype = "dashed")+

geom_vline(data = resamp_ci_df,

aes(xintercept = mu,

col = `Sample Size`))+

geom_segment(data = resamp_ci_df,

aes(x = ll, xend =ul, y = y, yend =y,

col = `Sample Size`))+

facet_wrap(~`Sample Size`, ncol=1)+

theme_minimal()+

labs(

y = "",

x = "Resampling Distribution",

title = "95% Confidence Intervals"

)

samp_ci_df <- samp_n10 %>%

bind_rows(samp_n30) %>%

bind_rows(samp_n300) %>%

mutate(

`Sample Size` = factor(paste("N =",size))

) %>%

mutate(

Coverage = case_when(

pop_mean > ll & pop_mean < ul ~ "red",

T ~ "black"

)

)

fig_ci2 <- samp_ci_df %>%

filter(sim %in% 1:100) %>%

filter(size == 10) %>%

ggplot(aes(y = sample_mean, x= sim))+

geom_pointrange(aes(ymin = ll, ymax =ul, col=Coverage))+

geom_hline(yintercept = mu_prof, linetype = "dashed")+

coord_flip()+

theme_minimal()+

guides(col = "none")+

facet_wrap(~`Sample Size`)

fig_ci3 <- samp_ci_df %>%

filter(sim %in% 1:100) %>%

ggplot(aes(y = sample_mean, x= sim))+

geom_pointrange(aes(ymin = ll, ymax =ul, col=Coverage))+

geom_hline(yintercept = mu_prof, linetype = "dashed")+

coord_flip()+

theme_minimal()+

guides(col = "none")+

facet_wrap(~`Sample Size`)

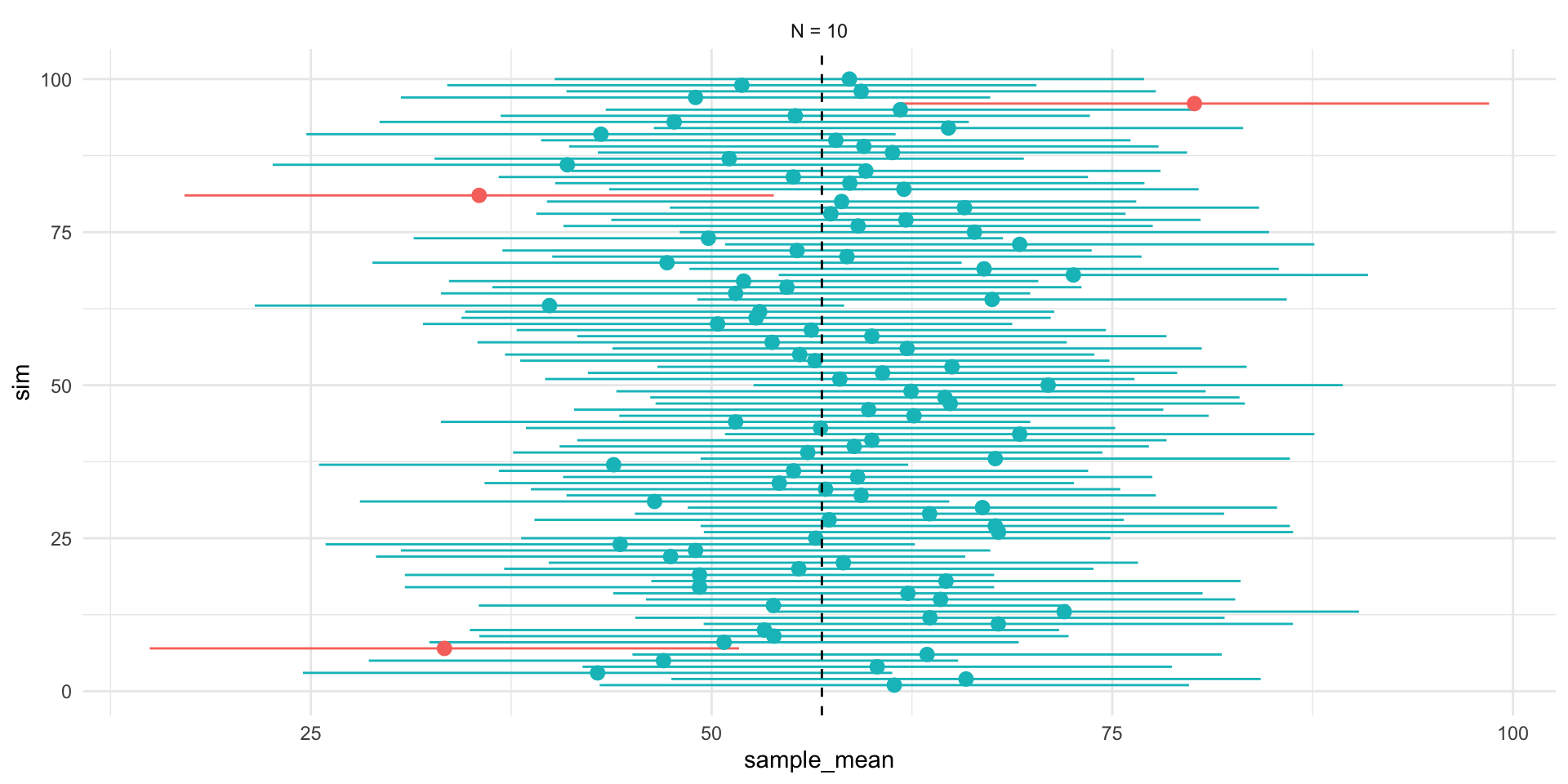

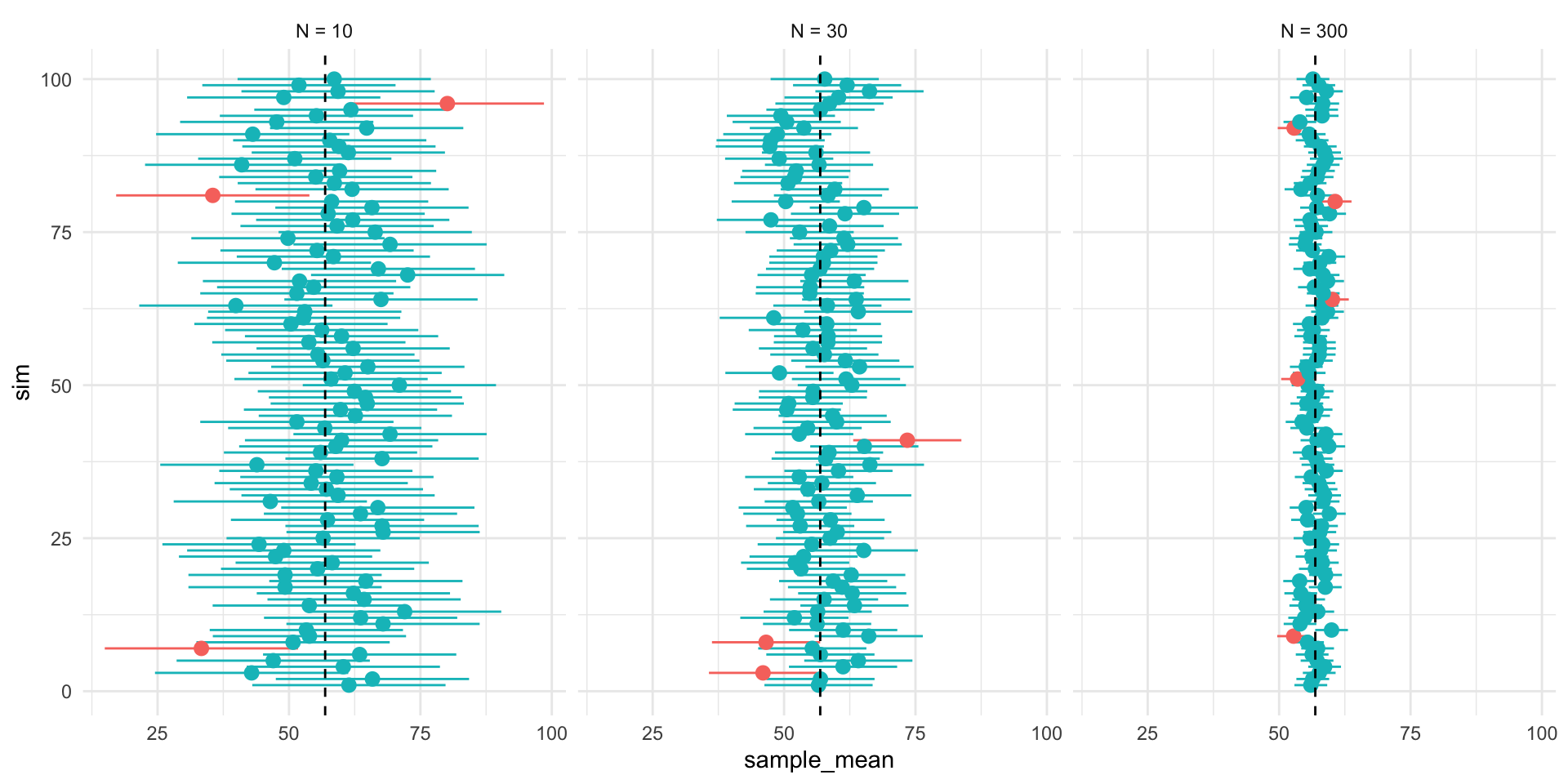

Figure 1 shows three confidence intervals for three samples of different sizes (N = 10, 30, 300). The CIs for N = 10 and N = 300 contain the truth (they include the population mean). By chance, the CI for N = 30 does not.

Figure 2 shows that our confidence is a property of the interval. Over repeated sampling, 95% of the intervals would contain the truth and 5% would not.

- In any one sample, the population parameter either is or is not within the interval.

Figure 3 shows that while the width of the interval declines with the sample size, the coverage properties remain the same.

Interpreting confidence intervals

Confidence intervals give a range of values that are likely to include the true value of the parameter

Our “confidence” is about the interval

In repeated sampling, we expect that 95% of 95% confidence intervals will contain the truth

For any one interval, the truth either is or is not inside it
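The repeated-sampling interpretation can be checked by simulation. The sketch below draws many samples from a population whose mean we set ourselves (an assumption made so that the truth is known) and counts how often the 95% interval contains it.

```r
set.seed(1140)
mu <- 50   # true population mean, known only because we simulate it
n  <- 30

covers <- replicate(2000, {
  samp <- rnorm(n, mean = mu, sd = 15)
  ci <- t.test(samp, conf.level = 0.95)$conf.int
  ci[1] < mu & mu < ci[2]     # TRUE if this interval contains the truth
})

coverage <- mean(covers)      # share of intervals that contain mu
coverage
```

Any single interval either covers `mu` or it does not; the 95% describes the procedure across repetitions.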

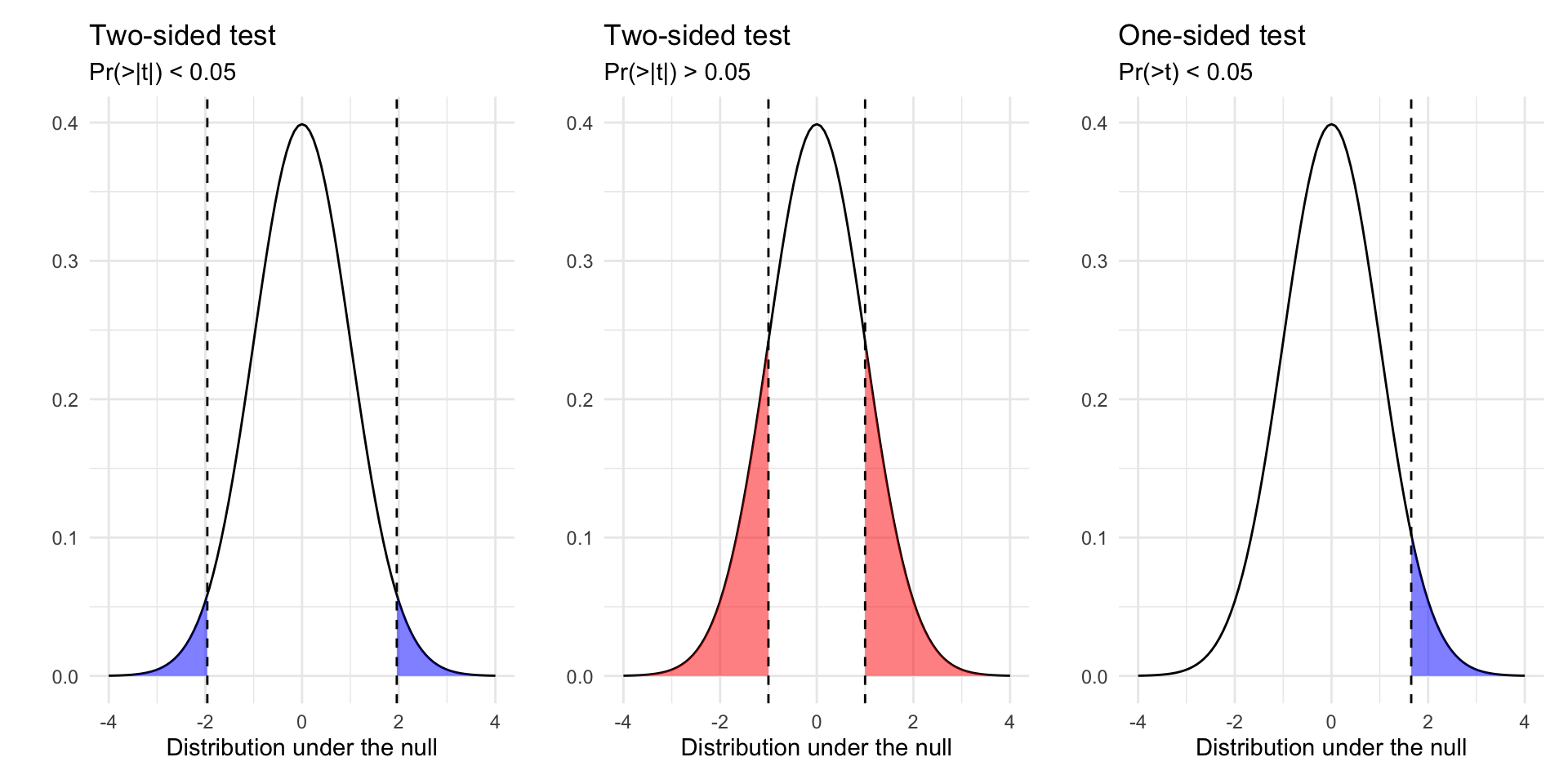

Hypothesis testing

What is a hypothesis test

A formal way of assessing statistical evidence. Combines

Deductive reasoning: the distribution of a test statistic if a null hypothesis were true

Inductive reasoning: based on the test statistic we observed, how likely is it that we would observe it if the null were true?

What is a test statistic?

- A way of summarizing data

- difference of means

- coefficients from a linear model

- coefficients from a linear model divided by their standard errors

- R^2

- Sums of ranks

Note

Different test statistics may be more or less appropriate depending on your data and questions.

What is a null hypothesis?

A statement about the world

Only interesting if we reject it

Would yield a distribution of test statistics under the null

Typically something like “X has no effect on Y” (Null = no effect)

We never accept the null; we can only reject it or fail to reject it

What is a p-value?

A p-value is a conditional probability: the probability of observing a test statistic as far from our hypothesis as the one we observed, or farther, if our hypothesis were true.

How do we do hypothesis testing?

Posit a hypothesis (e.g. X has no effect on Y)

Calculate the test statistic (e.g. a difference of means)

Derive the distribution of the test statistic under the null via simulation or asymptotic theory

Compare the test statistic to the distribution under the null

Calculate p-value (Two Sided vs One sided tests)

Reject or fail to reject/retain our hypothesis based on some threshold of statistical significance (e.g. p < 0.05)
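These steps can be sketched as a permutation test in base R. The data are simulated with a deliberately large effect so the example is easy to read; the names `d`, `y`, and `diff_means` are hypothetical, and shuffling group labels is only one of several ways to derive a null distribution.

```r
set.seed(1140)
d <- rep(c(0, 1), each = 50)          # hypothetical treatment indicator
y <- 1.5 * d + rnorm(100)             # simulated outcome with a true effect

# Steps 1-2: null hypothesis of no difference; test statistic = difference of means
diff_means <- function(y, d) mean(y[d == 1]) - mean(y[d == 0])
observed <- diff_means(y, d)

# Step 3: distribution of the statistic under the null, by shuffling labels
null_dist <- replicate(2000, diff_means(y, sample(d)))

# Steps 4-5: two-sided p-value, the share of null statistics at least as extreme
p_value <- mean(abs(null_dist) >= abs(observed))

# Step 6: decision at the conventional 0.05 threshold
reject <- p_value < 0.05
c(observed = observed, p_value = p_value)
```

A one-sided test would instead compare `null_dist >= observed` (or `<=`), without the absolute values.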

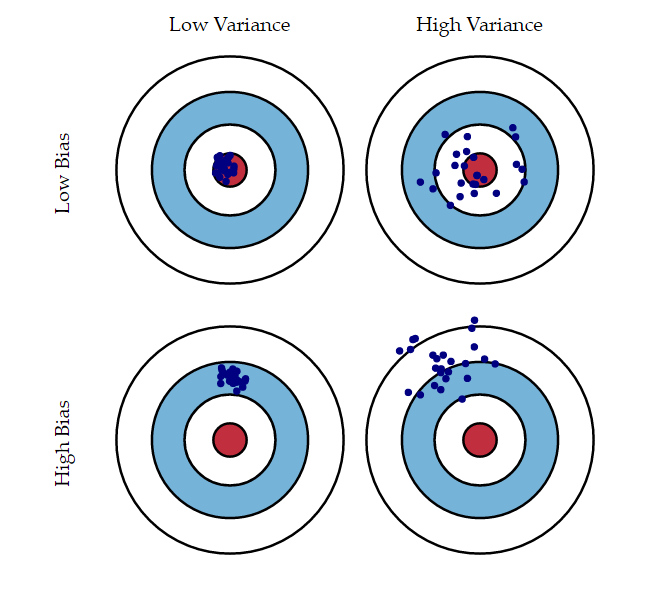

Outcomes of hypothesis tests

Two conclusions are possible from a hypothesis test: we can reject or fail to reject the hypothesis.

We never “accept” a hypothesis, since there are, in theory, an infinite number of other hypotheses we could have tested.

Our decision can produce four outcomes and two types of error:

| | Reject | Fail to Reject |
|---|---|---|
| Null is true | False Positive | Correct! |
| Null is false | Correct! | False Negative |

- Type 1 Errors: False Positive Rate (p < 0.05)

- Type 2 Errors: False negative rate (1 - Power of test)
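Both error rates can be approximated by simulation. The sketch below is an illustration with assumed settings (two groups of 50, a true effect of half a standard deviation when the null is false); `reject_rate` is a hypothetical helper.

```r
set.seed(1140)

# Share of simulated two-group studies whose t-test has p < 0.05
reject_rate <- function(effect, n = 50, sims = 2000) {
  mean(replicate(
    sims,
    t.test(rnorm(n, mean = effect), rnorm(n))$p.value < 0.05
  ))
}

type1_rate <- reject_rate(effect = 0)    # null is true: rejections are false positives
power      <- reject_rate(effect = 0.5)  # null is false: rejections are correct
type2_rate <- 1 - power                  # false negative rate

round(c(type1 = type1_rate, power = power, type2 = type2_rate), 3)
```

The Type 1 rate should sit near the 0.05 threshold by construction, while the Type 2 rate depends on the effect size and sample size.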

Quantifying uncertainty in regression

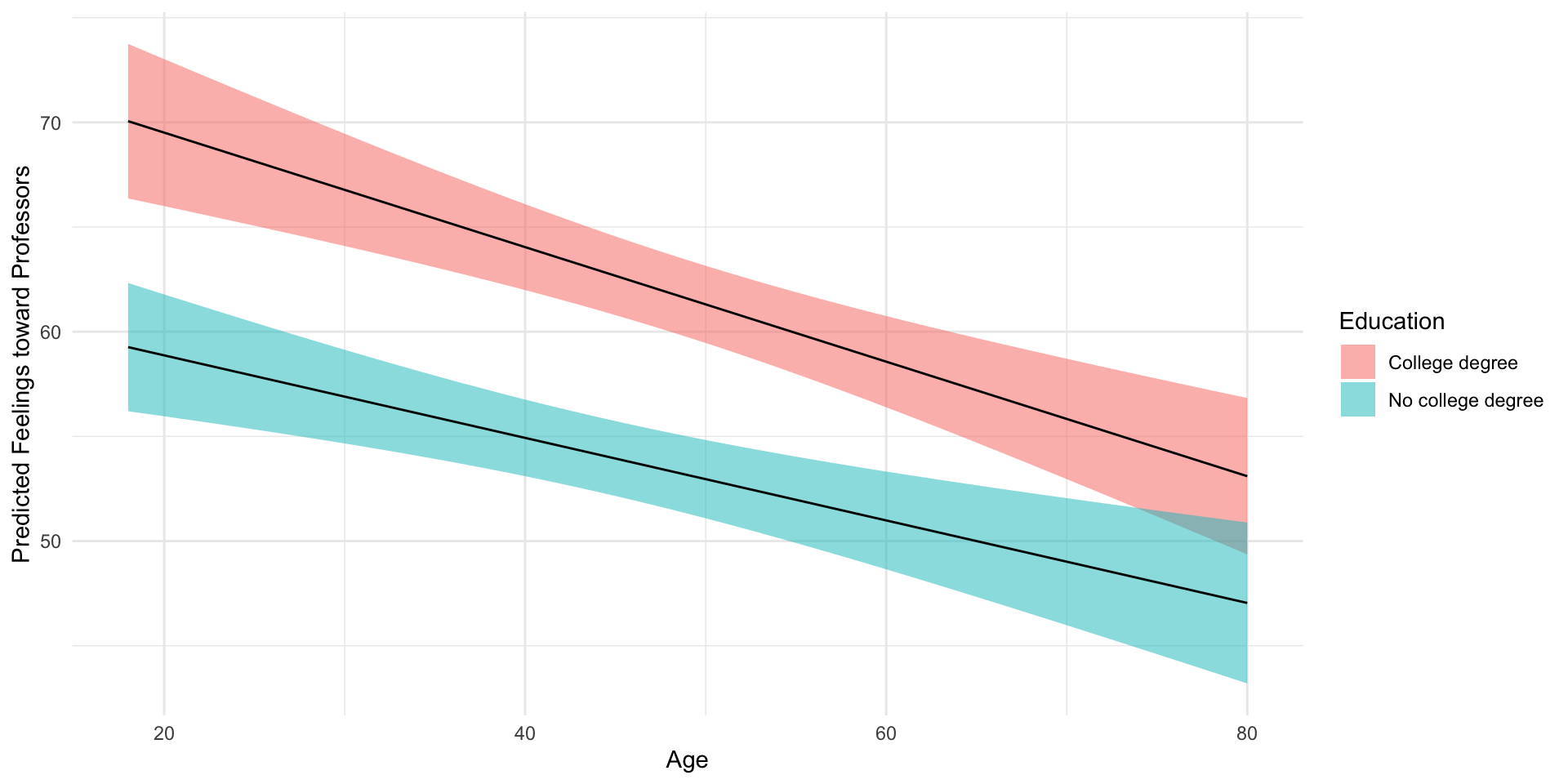

How does education condition the relationship between age and feelings toward professors?

Let’s fit the following “interaction” model to assess whether education moderates the relationship between age and evaluations
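Written out, an interaction model with these terms takes the following form. The β and ε symbols are assumed notation, and the terms are inferred from the coefficient names in the regression output (`age`, `has_degree`, and their product, with `ft_professors` as the outcome).

```latex
\text{ft\_professors}_i = \beta_0 + \beta_1\,\text{age}_i + \beta_2\,\text{has\_degree}_i
  + \beta_3\,(\text{age}_i \times \text{has\_degree}_i) + \epsilon_i
```

In this form, β1 is the age slope for respondents without a college degree, and β3 is how much that slope differs for respondents with one.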

And unpack the output

| term | estimate | std.error | statistic | p.value | conf.low | conf.high | df | outcome |
|---|---|---|---|---|---|---|---|---|
| (Intercept) | 62.812 | 2.326 | 27.001 | 0.000 | 58.249 | 67.375 | 1689 | ft_professors |
| age | -0.197 | 0.048 | -4.069 | 0.000 | -0.292 | -0.102 | 1689 | ft_professors |
| has_degreeCollege degree | 12.168 | 3.602 | 3.378 | 0.001 | 5.103 | 19.234 | 1689 | ft_professors |
| age:has_degreeCollege degree | -0.076 | 0.072 | -1.064 | 0.288 | -0.217 | 0.064 | 1689 | ft_professors |

| | Model 1 |
|---|---|
| (Intercept) | 62.81*** |
| | (2.33) |
| age | -0.20*** |
| | (0.05) |
| has_degreeCollege degree | 12.17*** |
| | (3.60) |
| age:has_degreeCollege degree | -0.08 |
| | (0.07) |
| R2 | 0.04 |
| Adj. R2 | 0.04 |
| Num. obs. | 1693 |
| RMSE | 27.35 |
| ***p < 0.001; **p < 0.01; *p < 0.05 | |

| | Model 1 |
|---|---|
| (Intercept) | 62.81* |
| | [58.25; 67.37] |
| age | -0.20* |
| | [-0.29; -0.10] |
| has_degreeCollege degree | 12.17* |
| | [ 5.10; 19.23] |
| age:has_degreeCollege degree | -0.08 |
| | [-0.22; 0.06] |
| R2 | 0.04 |
| Adj. R2 | 0.04 |
| Num. obs. | 1693 |
| RMSE | 27.35 |
| * 0 outside the confidence interval. | |

pred_df <- expand_grid(

has_degree = c("College degree", "No college degree"),

age = 18:80

)

pred_df <- cbind(pred_df,

predict(m1, newdata = pred_df, interval = "confidence")$fit

)

m1_plot <- pred_df %>%

ggplot(aes(age, fit, fill = has_degree))+

geom_ribbon(aes(ymin=lwr, ymax=upr),alpha=0.5)+

geom_line() +

labs(

fill = "Education",

x = "Age",

y = "Predicted Feelings toward Professors"

)+

theme_minimal()

The relationship between age and feelings toward professors does not appear to vary based on whether you have a college degree or not.

- The coefficient on the interaction term is non-significant

- Its p-value is 0.288 (p > 0.05)

- The 95% confidence interval is [-0.22; 0.06]

- Both suggest that 0 (no evidence of moderation) is a plausible claim given the data

- We see this in the plot, where we find clear evidence of the main effects of each variable (negative slope for age, big differences between college and no college), but little evidence of an interaction (similar slopes)
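To see what "similar slopes" means numerically, the age slope for each group can be worked out from the reported coefficients. The sketch below simply copies the point estimates from the regression output; the vector `b` and its element names are hypothetical labels, and age 40 is an arbitrary illustration.

```r
# Point estimates copied from the regression output
b <- c(intercept = 62.812, age = -0.197, degree = 12.168, age_x_degree = -0.076)

# Age slope for each group
slope_no_degree <- b[["age"]]                          # -0.197
slope_degree    <- b[["age"]] + b[["age_x_degree"]]    # -0.273

# Predicted feelings toward professors at age 40
pred_no_degree <- b[["intercept"]] + slope_no_degree * 40
pred_degree    <- b[["intercept"]] + b[["degree"]] + slope_degree * 40

round(c(slope_no_degree = slope_no_degree, slope_degree = slope_degree,
        pred_no_degree = pred_no_degree, pred_degree = pred_degree), 3)
```

The two slopes differ by only 0.076 points per year of age, which is small relative to the interaction's standard error of 0.072.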

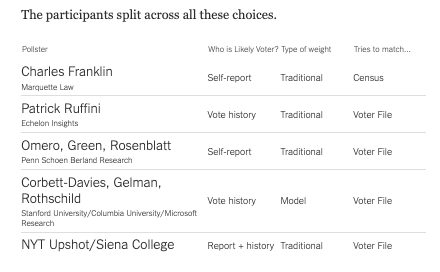

How polling works

What’s a survey

A survey is a structured interview designed to generate data

Surveys are conducted on samples from a population

- Draw inferences about the population based on estimates from our sample

The theory of polling depends on the power of random sampling

The practice of polling tries to account and adjust for all the ways a poll can fall short of this theoretical ideal

Key Features of an (Election) Survey

Pollster: Who’s doing the survey

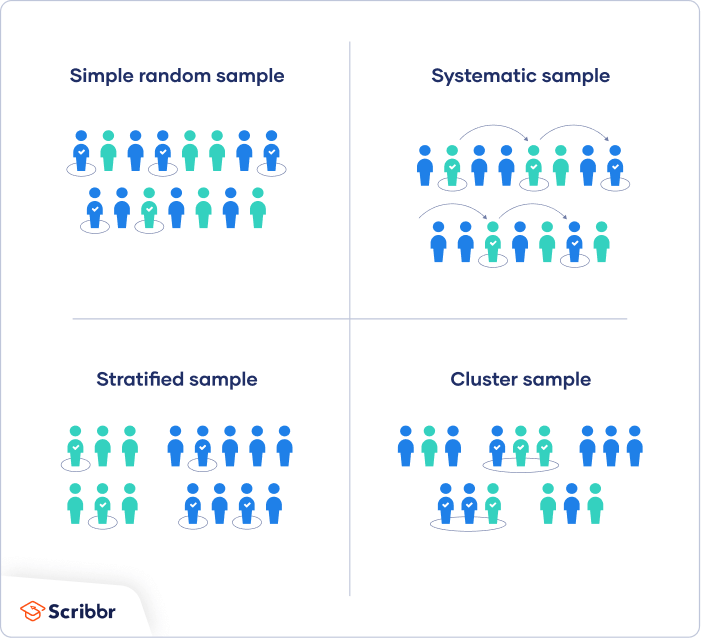

Sampling frame: A list from which the sample was drawn (e.g. a voter file)

Sampling Procedure: How people are selected from the sampling frame to participate in survey

Sample size: How many people were surveyed

Survey mode: How the survey was conducted

Survey instrument: What the survey asked

Survey weights: Adjustments to make the survey more representative of the population

Likely voter model: A way of distinguishing (likely) voters from non-voters

Margin of error: A range of plausible values for the true population value

Probability based sampling (Why surveys work?)

How polls can be wrong?

Error and Bias

Polling Error

Total Survey Error in election polling is a function of:

Sampling Error: The error that arises from sampling from a population

- Sample Size

- Margins of error typically only reflect sampling error

Temporal Error: The error that comes from polling a dynamic race at a specific point in time

- Polls closer to the election

Non-sampling Error: Errors that arise from how a poll is implemented and analyzed

- Coverage error: Sampling Frame

- Response bias: Some people are more or less likely to take a poll

- Measurement bias: Question wording and order can influence responses

- Processing and adjustment error: Failing to weight for key demographics

- And more…

Polling is Hard

Polling is hard

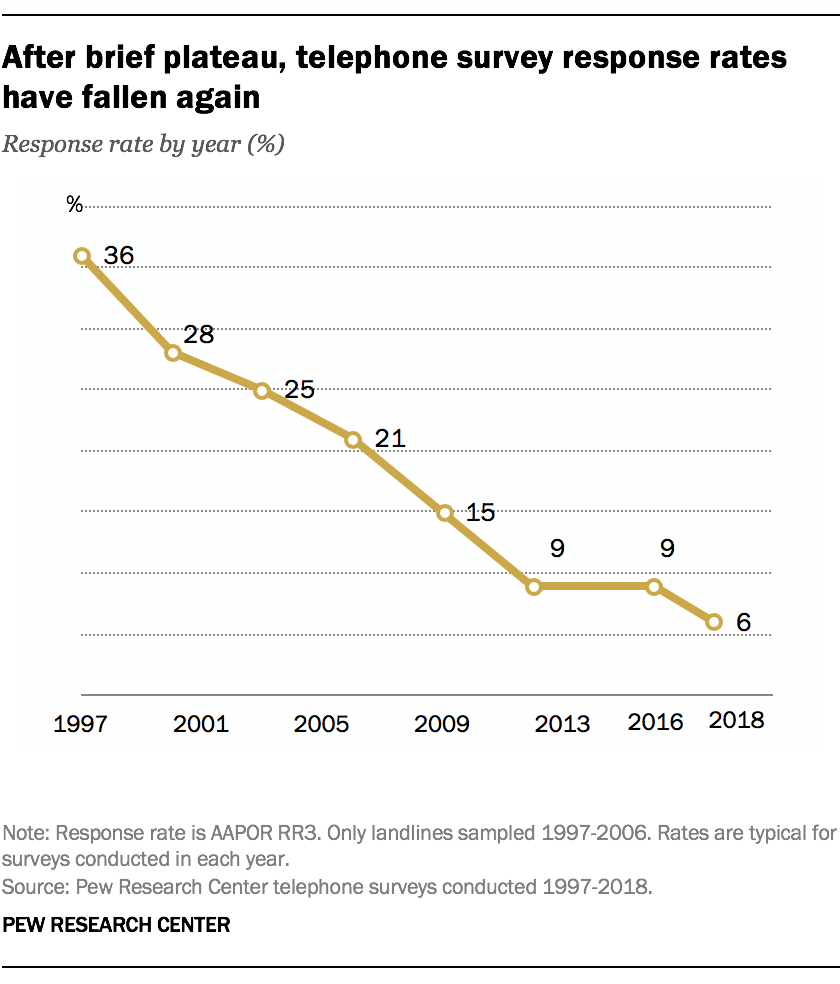

Response rates are low

Response rates differ

Adjustments are imperfect and uncertain

Polling for elections is particularly hard

- The population of interest is unknown (voters) and changing

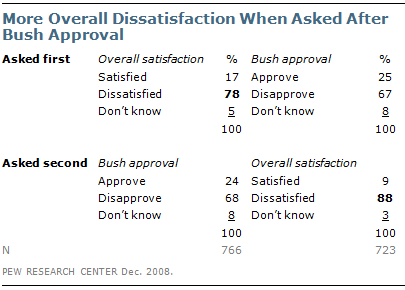

Question wording effects

Would you:

- favor or oppose taking military action in Iraq to end Saddam Hussein’s rule

- 68% favor, 25% oppose

- favor or oppose taking military action in Iraq to end Saddam Hussein’s rule even if it meant that U.S. forces might suffer thousands of casualties

- 43% favor, 48% oppose

Source: Pew

Question order effects

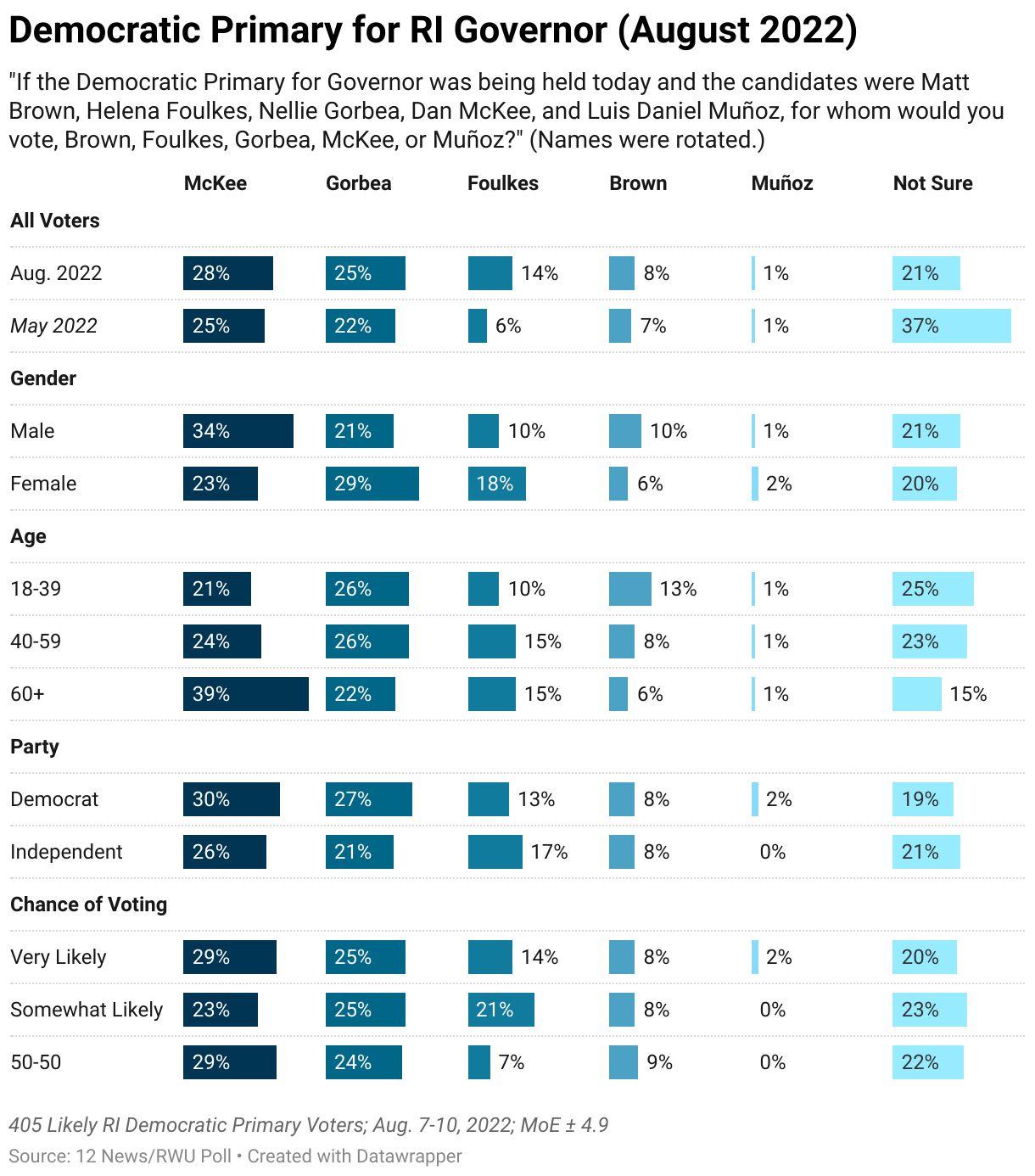

Election Polling: Example

12 News/Roger Williams University Poll – August 2022

- Pollster: Fleming & Associates

- Sampling frame: Probability sample of registered voters, Aug 7-10, 2022

- Sample size: 405

- Survey mode: Live caller with landlines and cell phones

- Survey Instrument: See the cross tabs of the questions

- Survey weights: None that I can tell

- Likely Voter Model: Hard to say, but based on past surveys probably two-part screener:

- Are you registered to vote?

- How likely are you to vote in the Democratic Primary?

- Margin of Error:
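For a sample of this size, the conventional 95% margin of error can be approximated with the usual formula for a proportion. This is a sketch under the assumption of simple random sampling and the most conservative proportion (p = 0.5); it is not the figure reported by the pollster, which may also reflect design effects.

```r
n <- 405    # sample size reported for the poll
p <- 0.5    # assumed proportion; 0.5 gives the widest margin

# Margin of error = critical value * standard error of a proportion
moe <- qnorm(0.975) * sqrt(p * (1 - p) / n)

round(100 * moe, 1)   # in percentage points, about 4.9
```

Margins for subgroups (e.g. likely primary voters only) would be wider, because they rest on fewer respondents.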

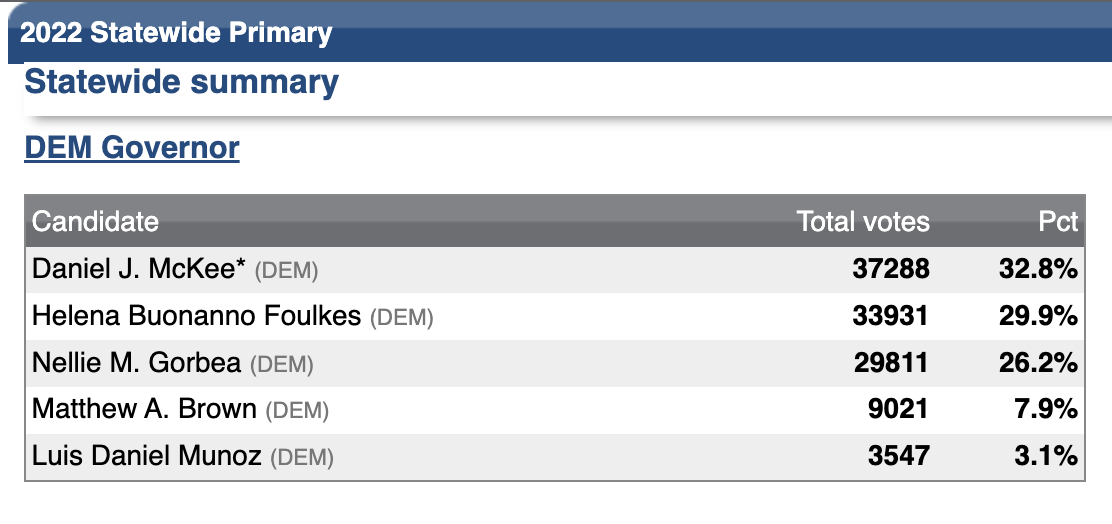

Evaluating the Performance of a Single Poll

Two criteria

- Did the poll call the race correctly?

- Yes! McKee won

- Did the poll get the margin right?

- Not exactly…

- McKee won by about 3 percentage points over Foulkes, not Gorbea

Statistics and POLS 1140 Part III

Causal claims involve counterfactual comparisons

Causal claims imply claims about counterfactuals

What would have happened if we were to change some aspect of the world?

We can represent counterfactuals in terms of potential outcomes

Individual Causal Effects

Let D indicate whether an individual receives a treatment, and let Y be the outcome of interest

For any individual, we can imagine different potential outcomes:

The fundamental problem of causal inference is that individual causal effects are unknowable because we only observe one of many potential outcomes

- A problem of missing data

A statistical solution to the FPoCI

Rather than focus on individual causal effects:

We focus on average causal effects (Average Treatment Effects [ATEs]):

When does the difference of averages provide us with a good estimate of the average difference?
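In potential-outcomes notation, these quantities are usually written as follows. The symbols are a common convention and an assumption here: D_i is a treatment indicator, and Y_i(1) and Y_i(0) are unit i's outcomes with and without treatment.

```latex
\begin{aligned}
\text{ICE}_i &= Y_i(1) - Y_i(0) \\
\text{ATE} &= E[Y_i(1) - Y_i(0)] = E[Y_i(1)] - E[Y_i(0)] \\
\text{Difference of observed averages} &= E[Y_i \mid D_i = 1] - E[Y_i \mid D_i = 0]
\end{aligned}
```

The first two lines are defined over potential outcomes, only one of which is ever observed for each unit. The third uses only observed data, so the question is when it equals the second.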

Let’s consider a simple example

Does eating chocolate make you happy?

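Potential Outcomes:

| Y(1): chocolate | Y(0): fruit | ICE: Y(1) − Y(0) |
|---|---|---|
| 7 | 3 | 4 |
| 8 | 6 | 2 |
| 5 | 4 | 1 |
| 4 | 3 | 1 |
| 6 | 10 | -4 |
| 8 | 9 | -1 |
| 5 | 4 | 1 |
| 7 | 8 | -1 |
| 4 | 3 | 1 |
| 6 | 0 | 6 |

| Mean Y(1) | Mean Y(0) | ATE |
|---|---|---|
| 6 | 5 | 1 |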

If we could observe everyone’s potential outcomes, we could calculate the ICE

On average eating chocolate increases happiness by 1 point on our 10-point scale (ATE = 1)
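The calculation behind that ATE can be reproduced directly from the potential outcomes table. The vector names `y1` and `y0` are labels chosen for this sketch.

```r
# Potential outcomes copied from the table
y1 <- c(7, 8, 5, 4, 6, 8, 5, 7, 4, 6)    # happiness if eating chocolate
y0 <- c(3, 6, 4, 3, 10, 9, 4, 8, 3, 0)   # happiness if eating fruit

ice <- y1 - y0      # individual causal effects
ate <- mean(ice)    # average treatment effect

ice   # 4 2 1 1 -4 -1 1 -1 1 6
ate   # 1
```

Note that the ATE of 1 hides a lot of variation: three of the ten people would be happier with fruit.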

Suppose we conducted a study and let folks select what they wanted to eat.

Observed Treatment:

| Preference | D | Observed Y |
|---|---|---|
| chocolate | 1 | 7 |
| chocolate | 1 | 8 |
| chocolate | 1 | 5 |
| chocolate | 1 | 4 |
| fruit | 0 | 10 |
| fruit | 0 | 9 |
| chocolate | 1 | 5 |
| fruit | 0 | 8 |
| chocolate | 1 | 4 |
| chocolate | 1 | 6 |

| Mean Y (D = 1) | Mean Y (D = 0) | Difference |
|---|---|---|
| 5.57 | 9 | -3.43 |

Selection Bias

Our estimate of the ATE is biased by the fact that folks who prefer fruit seem to be happier than folks who prefer chocolate in this example

In general, selection bias occurs when folks who receive the treatment differ systematically from folks who don’t
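The biased estimate from the self-selection study can be reproduced from the same numbers. In this sketch the treatment vector `d` is copied from the observed-treatment table, and the observed outcome is whichever potential outcome matches the snack each person chose.

```r
# Potential outcomes from the example
y1 <- c(7, 8, 5, 4, 6, 8, 5, 7, 4, 6)    # happiness if eating chocolate
y0 <- c(3, 6, 4, 3, 10, 9, 4, 8, 3, 0)   # happiness if eating fruit

# Self-selected treatment: 1 = chose chocolate, 0 = chose fruit
d <- c(1, 1, 1, 1, 0, 0, 1, 0, 1, 1)

# We only observe the potential outcome that matches the choice
y <- ifelse(d == 1, y1, y0)

naive_estimate <- mean(y[d == 1]) - mean(y[d == 0])
true_ate <- mean(y1 - y0)

round(c(naive = naive_estimate, truth = true_ate), 2)   # -3.43 vs 1
```

The naive comparison has the wrong sign because the people who chose fruit would have been happier than the others under either snack.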

What if, instead of letting people pick and choose, we randomly assigned half our respondents to receive chocolate and half to receive fruit?

Randomly Assigned Treatment:

| Preference | D | Observed Y |
|---|---|---|
| chocolate | 1 | 7 |
| chocolate | 1 | 8 |
| chocolate | 0 | 4 |
| chocolate | 1 | 4 |
| fruit | 0 | 10 |
| fruit | 1 | 8 |
| chocolate | 0 | 4 |
| fruit | 0 | 8 |
| chocolate | 1 | 4 |
| chocolate | 0 | 0 |

| Mean Y (D = 1) | Mean Y (D = 0) | Difference |
|---|---|---|
| 6.2 | 5.2 | 1 |

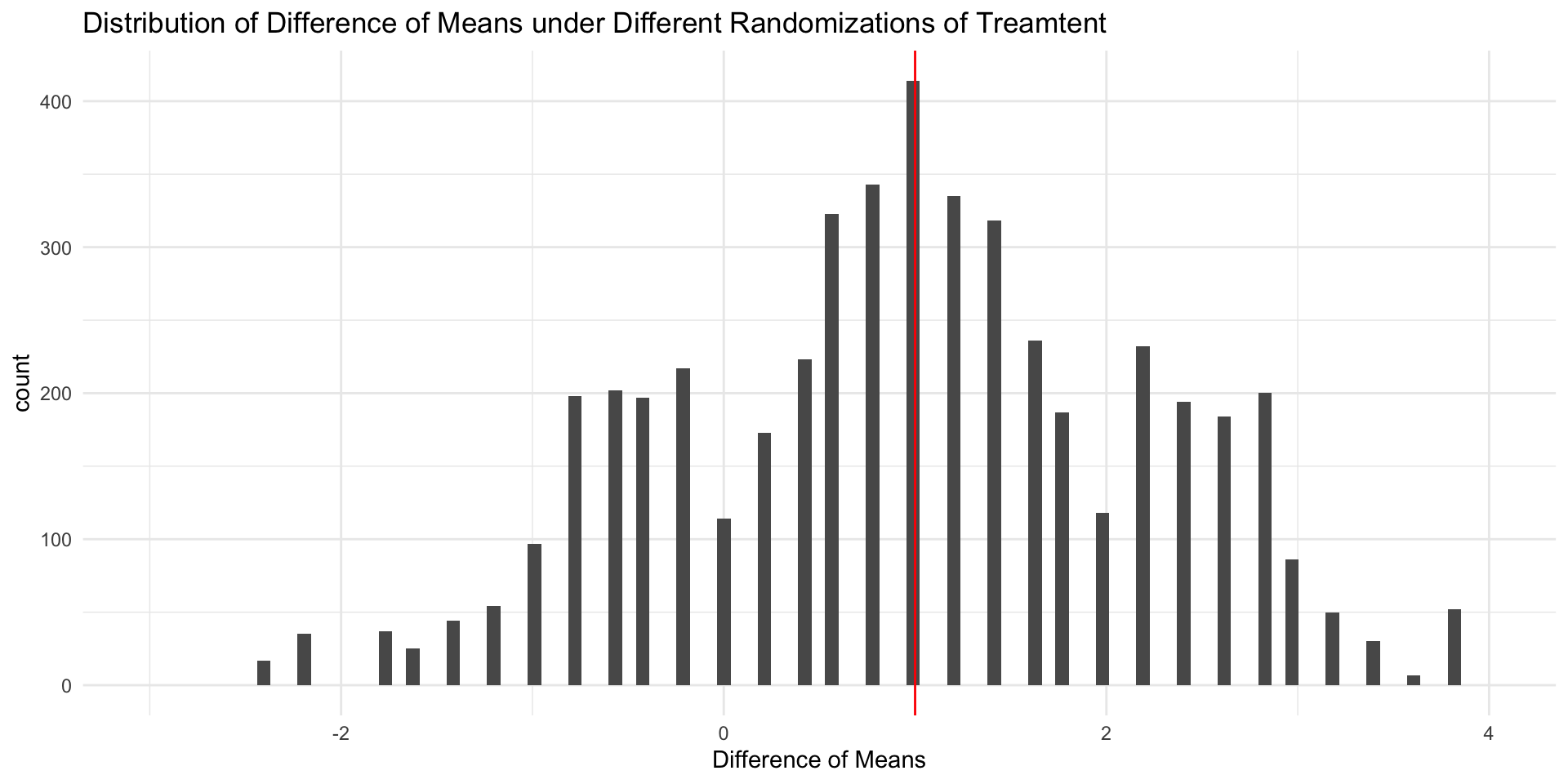

Random Assignment

When treatment has been randomly assigned, a difference in sample means provides an unbiased estimate of the ATE

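The fact that our estimate exactly equals the true ATE (both are 1) is a coincidence of this particular assignment

If we randomly assigned treatment a different way, we’d get a different estimate.

In general, unbiased estimators tend to be neither too high nor too low: averaged over all the ways treatment could have been randomly assigned, the estimate equals the true ATE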

Estimating an Average Treatment Effect

If treatment has been randomly assigned, we can estimate the ATE by taking the difference of means between treatment and control:

That is, the ATE is causally identified by the difference of means estimator in an experimental design

Random Assignment 1

| Preference | D | Observed Y |
|---|---|---|
| chocolate | 1 | 7 |
| chocolate | 1 | 8 |
| chocolate | 0 | 4 |
| chocolate | 1 | 4 |
| fruit | 0 | 10 |
| fruit | 1 | 8 |
| chocolate | 0 | 4 |
| fruit | 0 | 8 |
| chocolate | 1 | 4 |
| chocolate | 0 | 0 |

| Mean Y (D = 1) | Mean Y (D = 0) | Difference |
|---|---|---|
| 6.2 | 5.2 | 1 |

Random Assignment 2

| Preference | D | Observed Y |
|---|---|---|
| chocolate | 0 | 3 |
| chocolate | 1 | 8 |
| chocolate | 0 | 4 |
| chocolate | 1 | 4 |
| fruit | 1 | 6 |
| fruit | 1 | 8 |
| chocolate | 0 | 4 |
| fruit | 1 | 7 |
| chocolate | 0 | 3 |
| chocolate | 0 | 0 |

| Mean Y (D = 1) | Mean Y (D = 0) | Difference |
|---|---|---|
| 6.6 | 2.8 | 3.8 |

Random Assignment 3

| Preference | D | Observed Y |
|---|---|---|
| chocolate | 1 | 7 |
| chocolate | 0 | 6 |
| chocolate | 1 | 5 |
| chocolate | 1 | 4 |
| fruit | 0 | 10 |
| fruit | 0 | 9 |
| chocolate | 0 | 4 |
| fruit | 1 | 7 |
| chocolate | 1 | 4 |
| chocolate | 0 | 0 |

| Mean Y (D = 1) | Mean Y (D = 0) | Difference |
|---|---|---|
| 5.4 | 5.8 | -0.4 |

Distribution of Sample ATEs
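With only ten people, the full distribution of estimates can be enumerated rather than simulated. The sketch below assumes the design used in the example (exactly five of ten people assigned to chocolate) and computes the difference of means for every one of the 252 possible assignments.

```r
# Potential outcomes from the example
y1 <- c(7, 8, 5, 4, 6, 8, 5, 7, 4, 6)    # happiness if eating chocolate
y0 <- c(3, 6, 4, 3, 10, 9, 4, 8, 3, 0)   # happiness if eating fruit

# Every way of choosing 5 of the 10 people for treatment (one column each)
assignments <- combn(10, 5)

# Difference of means under each possible assignment
estimates <- apply(assignments, 2, function(treated) {
  mean(y1[treated]) - mean(y0[-treated])
})

length(estimates)   # 252 possible assignments
mean(estimates)     # equals the true ATE of 1 (up to floating point)
range(estimates)    # individual estimates vary widely around it
```

Any single assignment can be far from 1, as the three examples show, but the estimates are centered on the true ATE. That is what unbiasedness means here.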

Observational vs Experimental Designs

Experimental designs are studies in which a causal variable of interest, the treatment, is manipulated by the researcher to examine its causal effects on some outcome of interest

Observational designs are studies in which a causal variable of interest is determined by someone/thing other than the researcher (nature, governments, people, etc.)

Two Kinds of Bias

Confounder bias: Failing to control for a common cause of D and Y (aka Omitted Variable Bias)

Collider bias: Controlling for a common consequence of D and Y

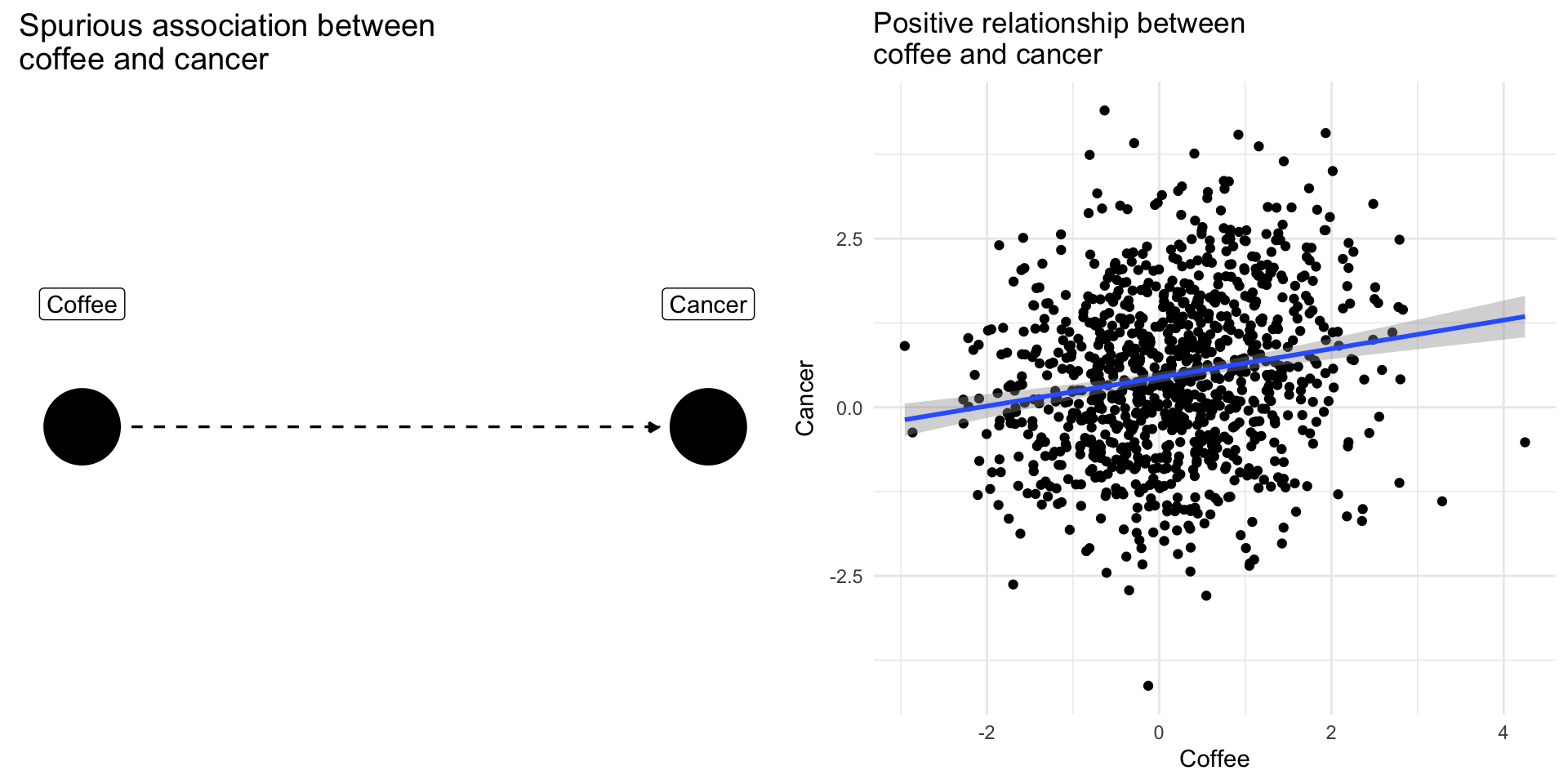

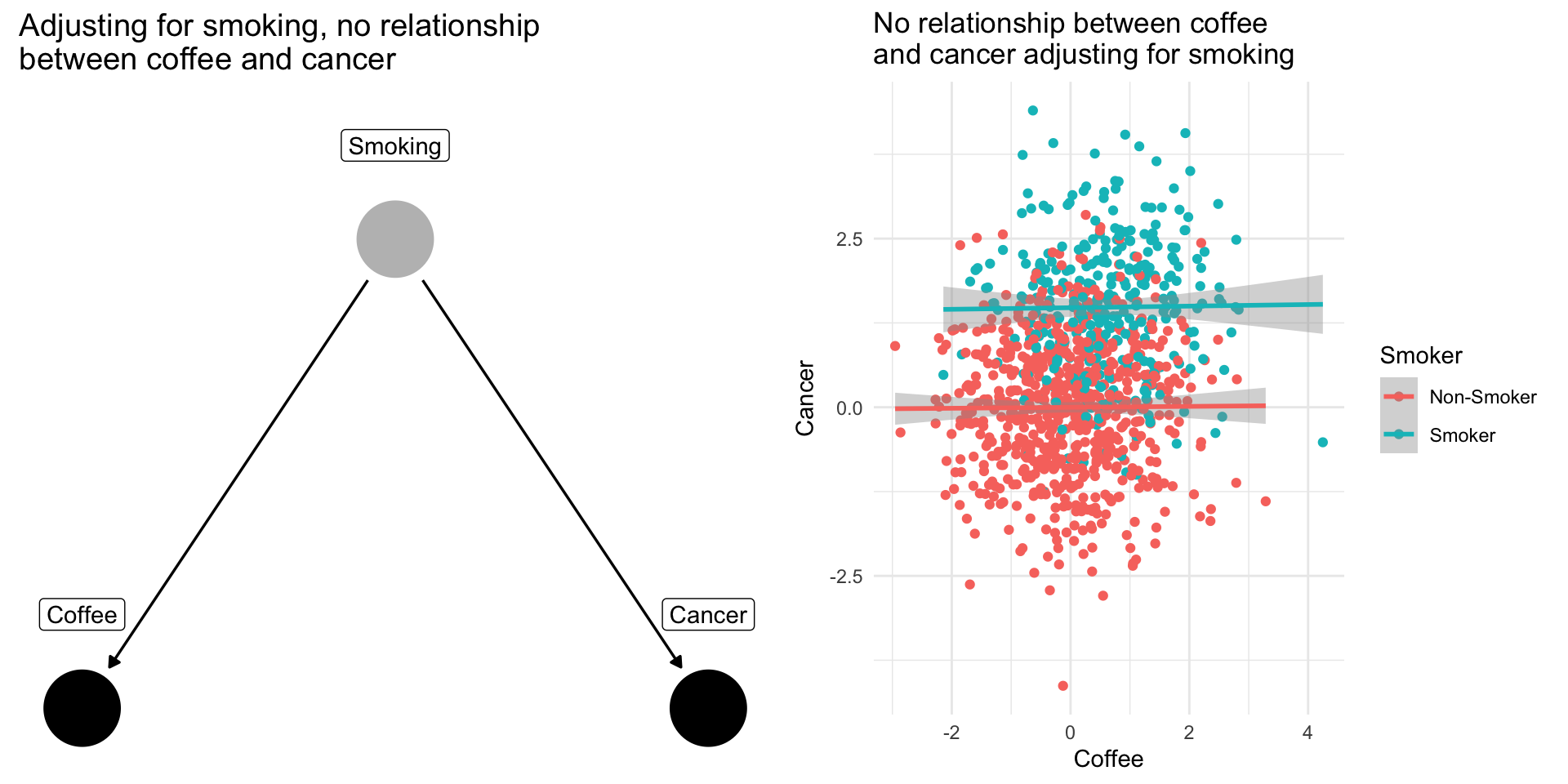

Confounding Bias: The Coffee Example

Drinking coffee doesn’t cause lung cancer, but we might find a correlation between them because they share a common cause: smoking.

Smoking is a confounding variable that, if omitted, will bias our results, producing a spurious relationship

Adjusting for confounders removes this source of bias

Note

When scholars include “control variables” in a regression, often they are trying to adjust for confounding variables that if omitted would bias their results
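A small simulation makes the coffee example concrete. All numbers below are invented for illustration: smoking raises both coffee consumption and a made-up cancer risk score, while coffee has no effect on risk at all.

```r
set.seed(1140)
n <- 5000

smoking     <- rbinom(n, 1, 0.3)               # confounder
coffee      <- 1 + 2 * smoking + rnorm(n)      # smokers drink more coffee
cancer_risk <- 5 * smoking + rnorm(n)          # risk depends only on smoking

# Omitting the confounder: coffee appears to predict cancer risk
naive <- coef(lm(cancer_risk ~ coffee))[["coffee"]]

# Adjusting for the confounder: the coffee coefficient is close to zero
adjusted <- coef(lm(cancer_risk ~ coffee + smoking))[["coffee"]]

round(c(naive = naive, adjusted = adjusted), 2)
```

Adjustment works here because the confounder is measured and the model is correctly specified, which is rarely guaranteed with real data.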

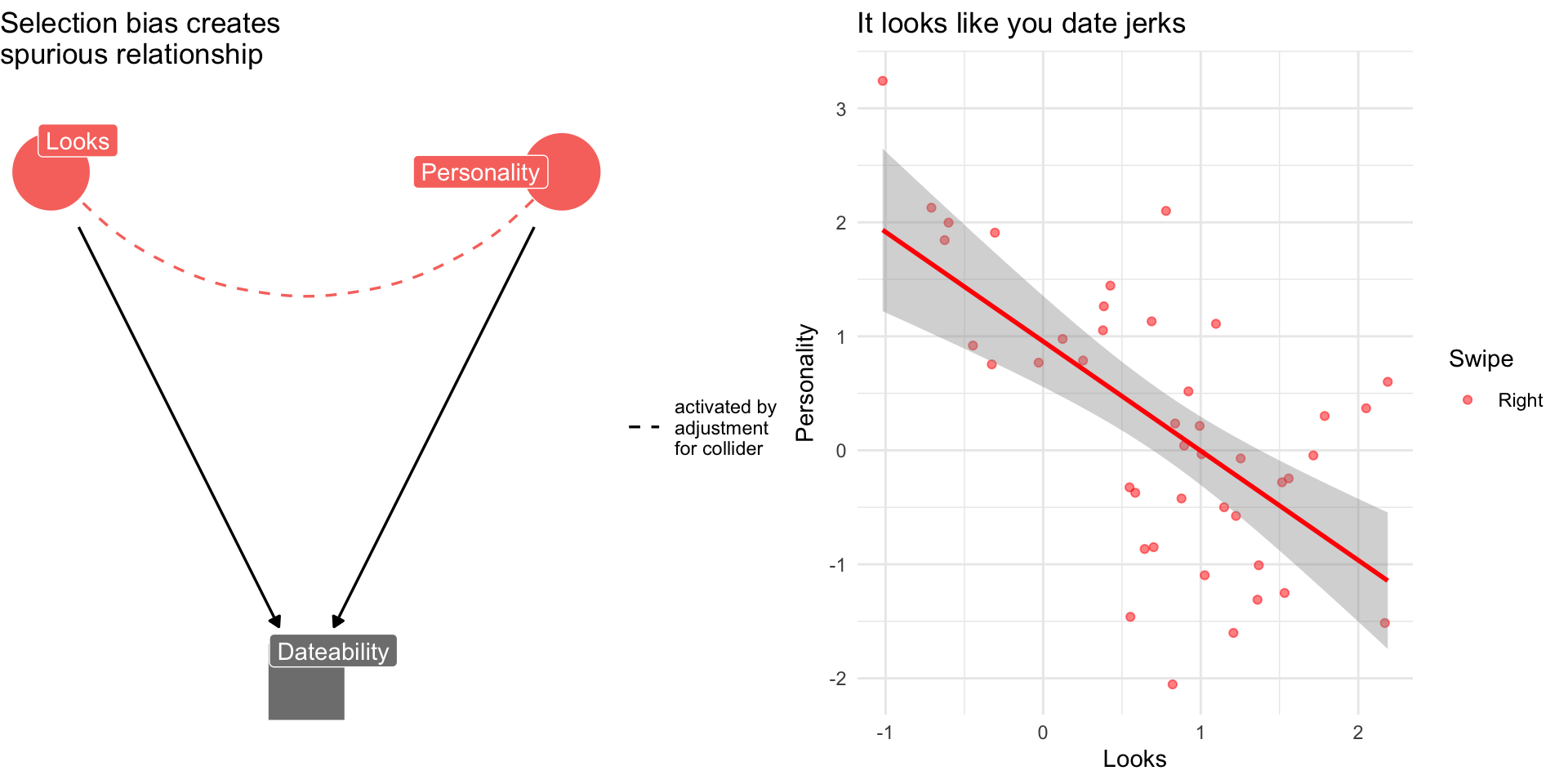

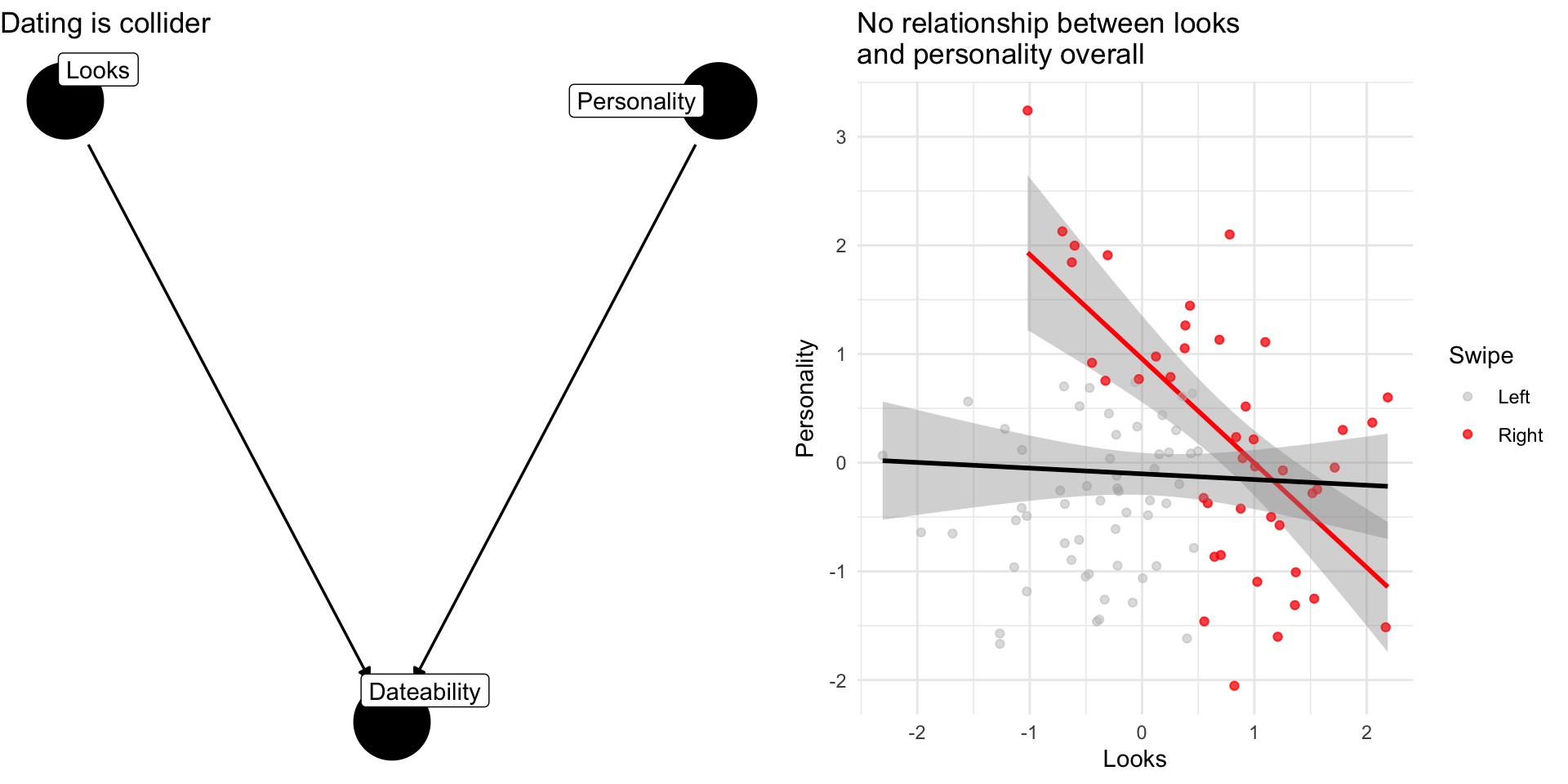

Collider Bias: The Dating Example

Why are attractive people such jerks?

Suppose dating is a function of looks and personality

Dating is a common consequence of looks and personality

Basing our claim off of who we date is an example of selection bias created by controlling for a collider

Note

If you see a regression model that controls for everything and the kitchen sink without theoretical justification, we might worry about the potential for collider bias
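The dating example can also be simulated. In the sketch below, looks and personality are generated independently, and the dating rule (a combined score above 1) is an arbitrary assumption chosen for illustration.

```r
set.seed(1140)
n <- 5000

looks       <- rnorm(n)
personality <- rnorm(n)                  # independent of looks by construction

# Collider: we only date people whose combined score clears a bar
dated <- (looks + personality) > 1

cor_all   <- cor(looks, personality)                   # near zero in the population
cor_dated <- cor(looks[dated], personality[dated])     # negative among people we date

round(c(everyone = cor_all, dated_only = cor_dated), 2)
```

Among the people we date, someone with weaker looks must have a better personality to have cleared the bar, which creates a negative correlation that does not exist in the full population.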

When to control for a variable:

Causal Inference

Causal inference is about making credible counterfactual comparisons

In an experiment, researchers create these comparisons through random assignment

- Pro: Addresses concerns about selection bias

- Con: Do results generalize?

In an observational study, researchers also attempt to make credible counterfactual comparisons through how they design their studies and analyze their data.

- Pro: May generalize better/greater ecological validity

- Cons: Greater potential for confounding and collider bias

In general, characteristics of the design are more important than the specific variables in a given model for addressing bias

What’s the effect of the Harris-Trump Debate?

Take a few moments and consider the following:

What’s the effect of the debate on the 2024 election?

Why might the debate have an effect?

Why might the debate not have an effect?

How would you know? What types of comparisons would you make?

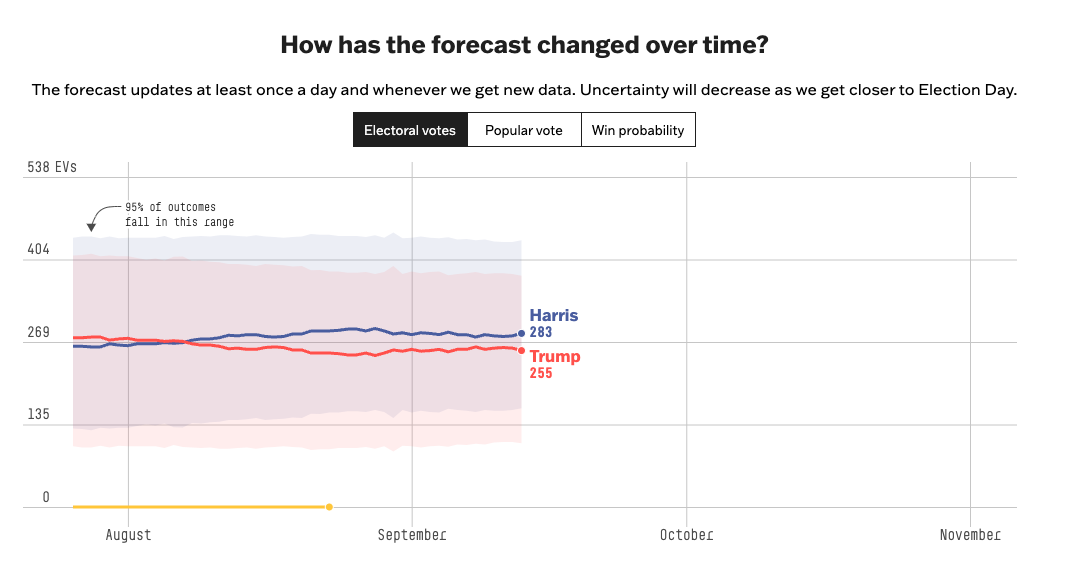

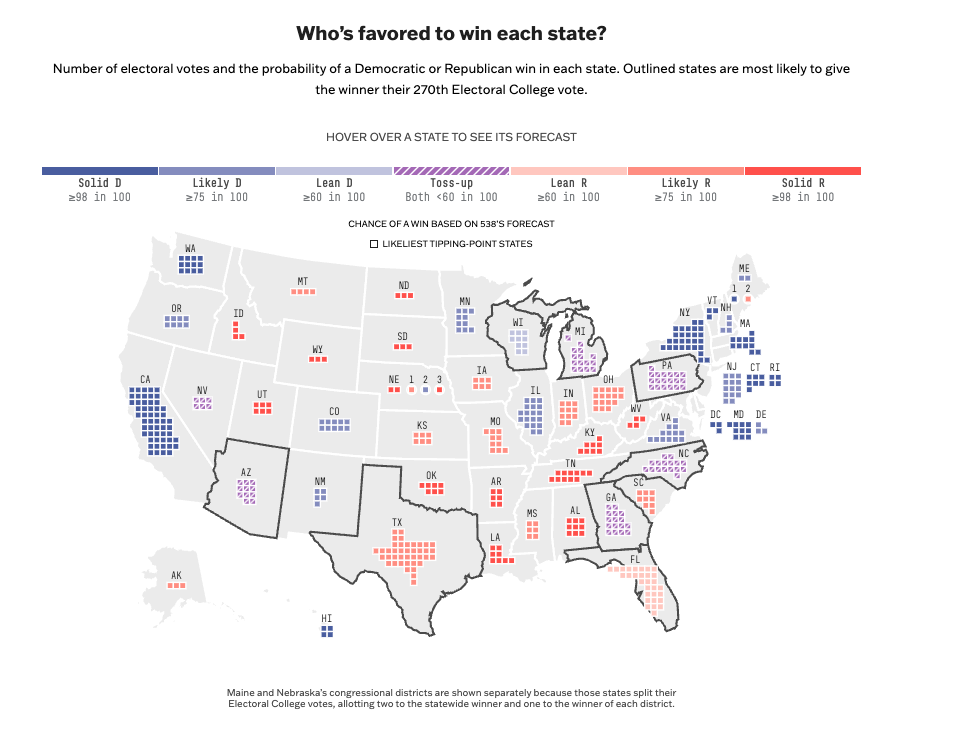

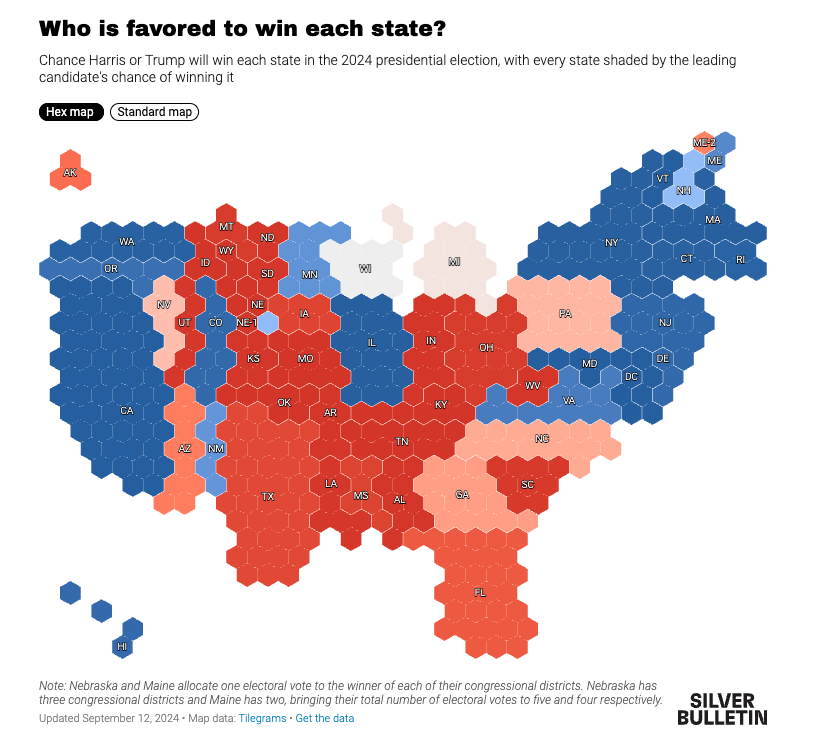

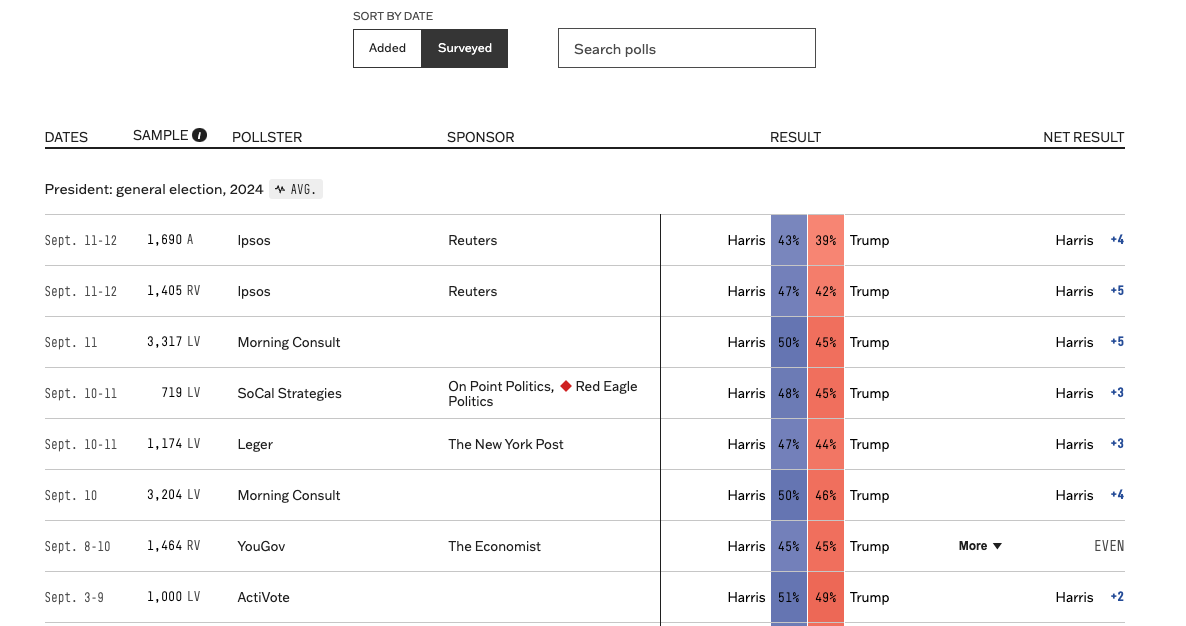

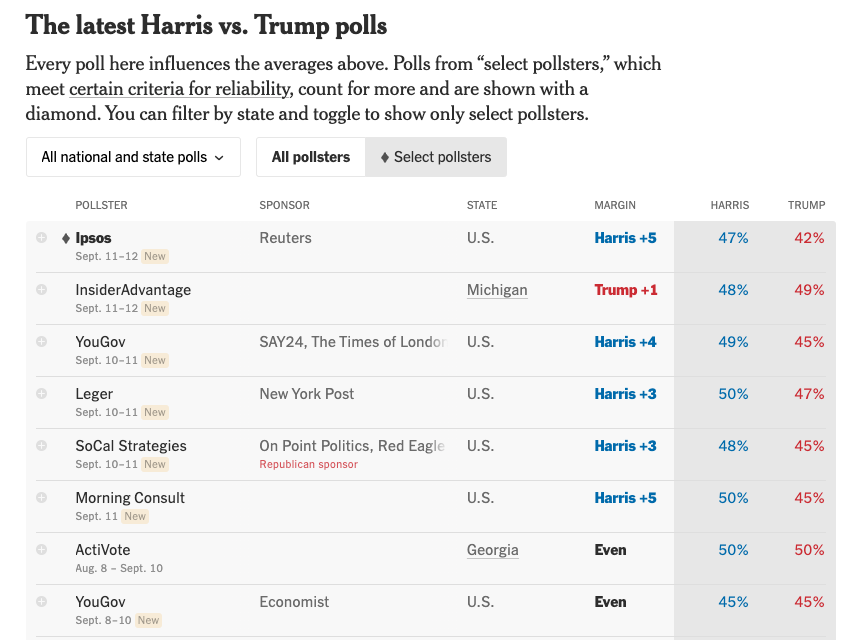

The Polls So Far

What to expect when:

- Day of:

- Snap polls (CNN)

- Online non-probability polls (ignore)

- This week:

- Online Panels (YouGov/IPSOS)

- Next week:

- More traditional RDD (NYT/Siena)

- It will take a while to know the “effect” of the debates.

Using polls to forecast elections

Forecasting Elections

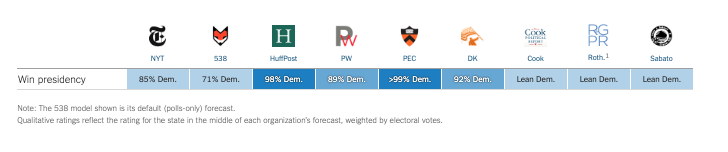

Election forecasts reflect varying combinations of:

- Expert Opinion

- Fundamentals

- Polling

Forecasts differ in the extent to which they rely on these components and how they integrate them in their final predictions

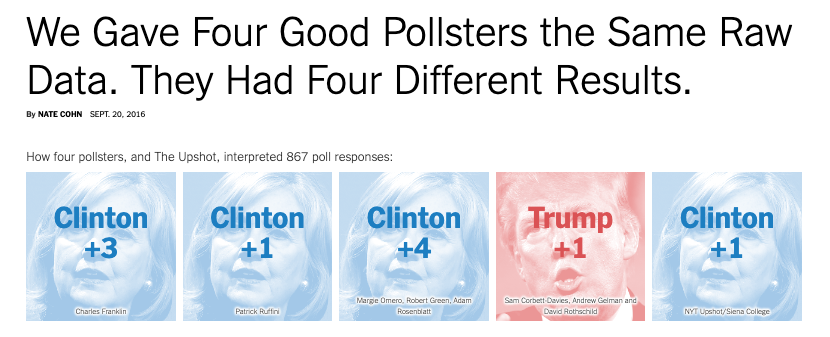

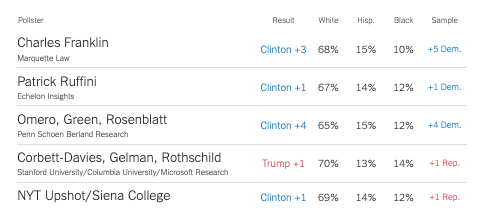

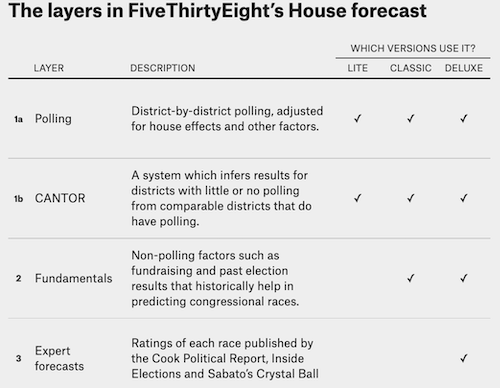

FiveThirtyEight’s Approach to Forecasting

Under Nate Silver…

Forecasting Elections with Polls

The preeminence of polling in modern forecasts reflects the success of Nate Silver and FiveThirtyEight in correctly predicting the 2008 (49/50 states correct) and 2012 (50/50) presidential elections

Any one poll is likely to deviate from the true outcome

Averaging over multiple polls tends to give a more accurate estimate, provided the polls aren’t systematically biased
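The logic of poll averaging, and its limit, can be illustrated with a simulation. Everything below is hypothetical: a true vote share of 52%, 20 polls of 800 respondents each, and a shared 3-point bias in the second scenario.

```r
set.seed(1140)
truth   <- 0.52   # assumed true vote share
n       <- 800    # respondents per poll
n_polls <- 20

# Simulate one election cycle: return a single poll and the poll average
one_cycle <- function(bias = 0) {
  polls <- rbinom(n_polls, size = n, prob = truth + bias) / n
  c(single = polls[1], average = mean(polls))
}

sims_unbiased <- replicate(2000, one_cycle(bias = 0))
sims_biased   <- replicate(2000, one_cycle(bias = 0.03))

# Mean absolute error of a single poll vs the average, in each scenario
err <- function(sims) rowMeans(abs(sims - truth))
err_unbiased <- err(sims_unbiased)
err_biased   <- err(sims_biased)

round(rbind(unbiased = err_unbiased, shared_bias = err_biased), 3)
```

Averaging shrinks random sampling error, but when every poll shares the same bias the average converges on the wrong number.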

Concerns about the polls reflect the failure of such approaches to predict

Trump’s Victory in 2016

Strength of Trump’s Support in 2020

Polling in Recent Elections

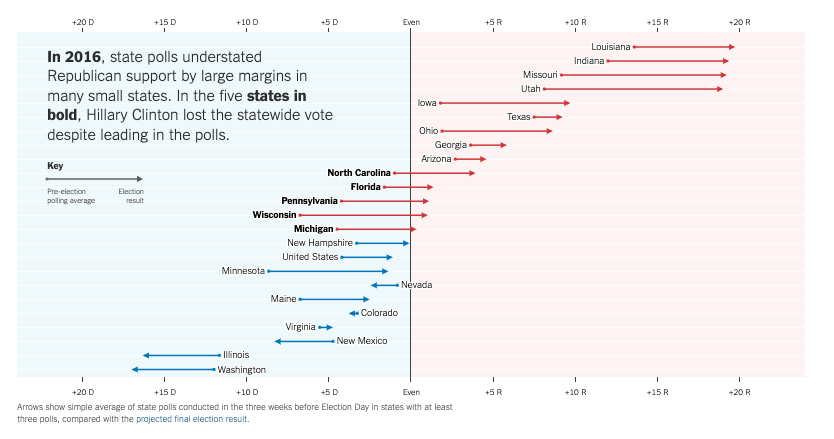

Polling the 2016 Election:

- The polls missed bigly

- National polls were reasonably accurate (Clinton wins Popular Vote)

- State polls overstated Clinton’s lead / understated Trump support

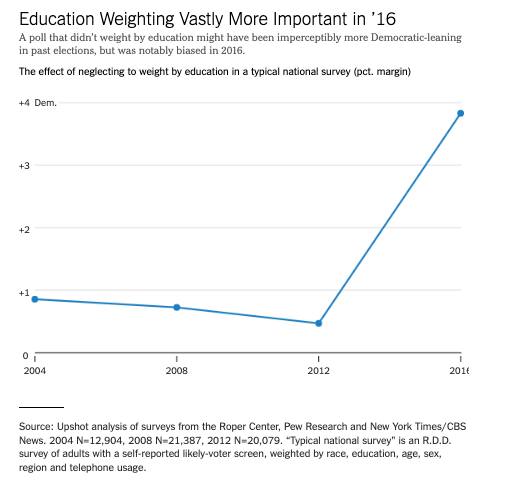

How did we get it so wrong in 2016?

Some likely explanations

Likely voter models overstated Clinton’s support

Large number of undecided voters broke decisively for Trump

White voters without a college degree underrepresented in pre-election surveys

Weighting for education

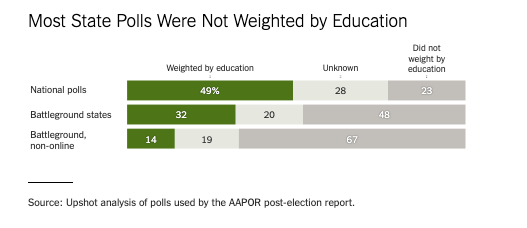

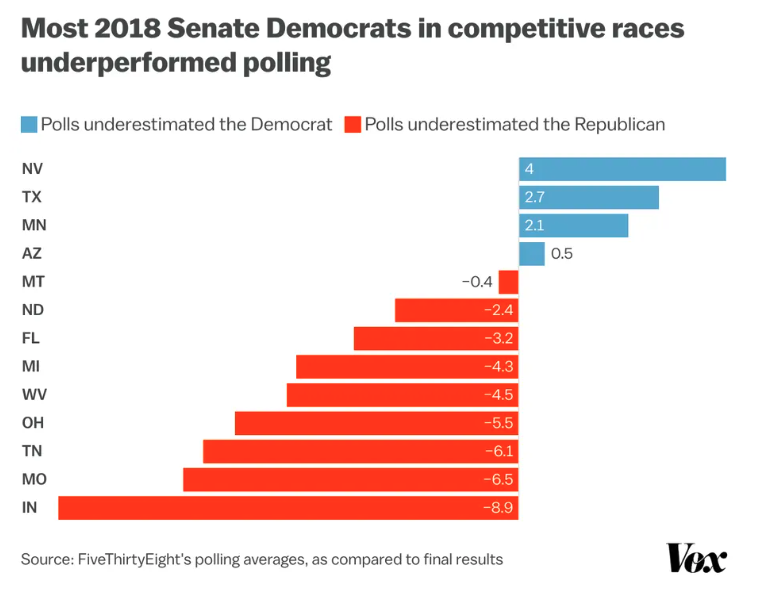

2018: A brief reprieve?

Polls did a better job

- Most state polls weighted by education

- Underestimated Democrats in House and Gubernatorial races

- No partisan bias in Senate Races

Forecasts correctly call:

- Democratic House

- Republican Senate

However…

2020: Historic Problems, Unclear Solutions

Average polling errors for the national popular vote were 4.5 percentage points, the highest in 40 years

Polls overstated Biden’s support by 3.9 points in national polls (4.3 points in state polls)

Polls overstated Democratic support in Senate and Gubernatorial races by about 6 points

Forecasts predicted Democrats would hold

- 48-55 seats in the Senate (actual: 50 seats)

- 225-254 seats in the House (actual: 222 seats)

2020: What Went Wrong

Unlike 2016, no clear cut explanations for what went wrong

Not a cause:

- Undecided voters

- Failing to weight for education

- Other demographic imbalances

- “Shy Trump Voters”

- Polling early vs election day voters

Potential Explanations

- Covid-19

- Democrats more likely to take polls

- Unit non-response

- Between parties

- Within parties

- Across new and unaffiliated voters

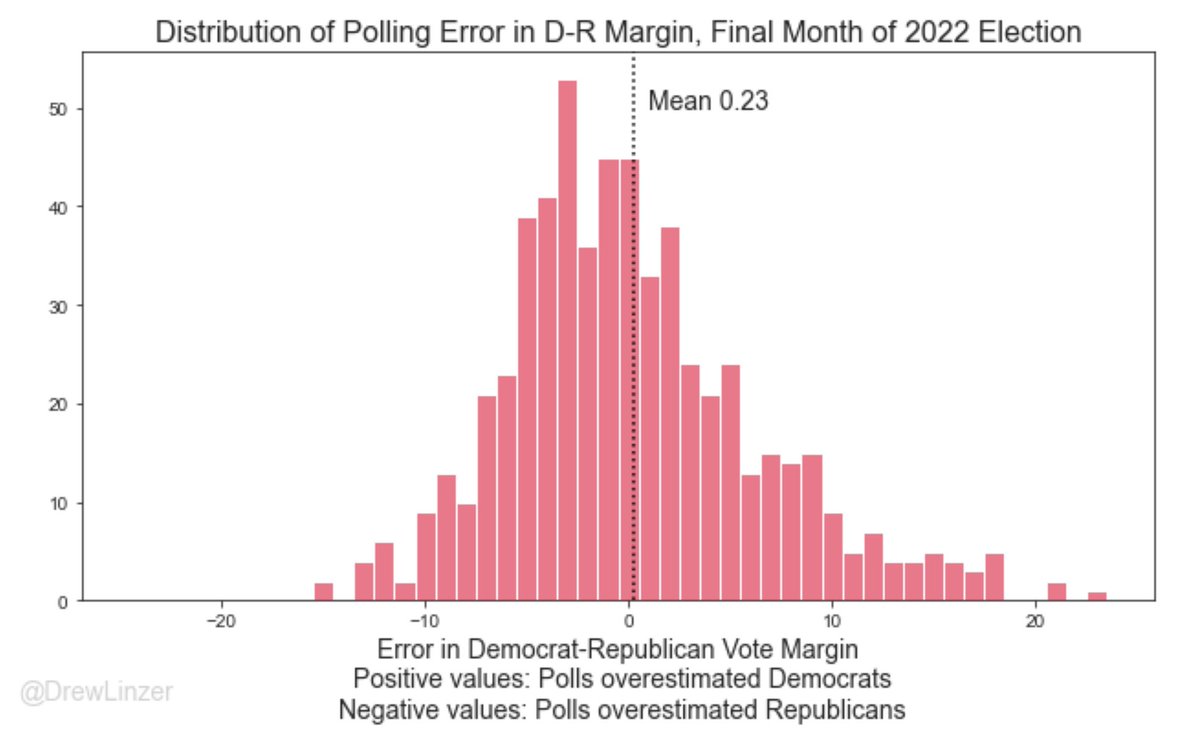

How the polls did in 2022

Overall, pretty good

Average error close to 0

Average absolute error ~ 4.5 percentage points

Some polls tended to overstate Republican support (e.g. Trafalgar)

What do the polls say for 2024

Harris 2-3 point lead in popular vote

Math of electoral college favors Republican candidates

Polls will be wrong, but hard to predict the direction of the error (this is a good thing)

A lot can happen

Different:

- Polls

- Weighting of fundamentals and polls

- Different modeling assumptions (How to model convention bumps)

Who’s right?

For Next Week
